Add Annotations

Upload existing annotations or create new labels using the visual annotator.

Step 3 of 3: Add Annotations

Part of the dataset preparation quickstart. Next: Train your model.

Annotations teach your VLM what to detect or understand. Upload existing annotations or create new ones using our visual annotator.

⏱️ Time: ~5-10 minutes (varies by dataset)

Need detailed guidance?

This is a quickstart overview. For comprehensive annotation guides, see:

- Upload annotations guide — All formats and requirements

- Annotate for Phrase Grounding — Object detection

- Annotate for VQA — Question answering

- AI-assisted tools — Speed up annotation

Choose your method

Upload existing annotations

Best for: When you already have annotated data in COCO, YOLO, or other formats

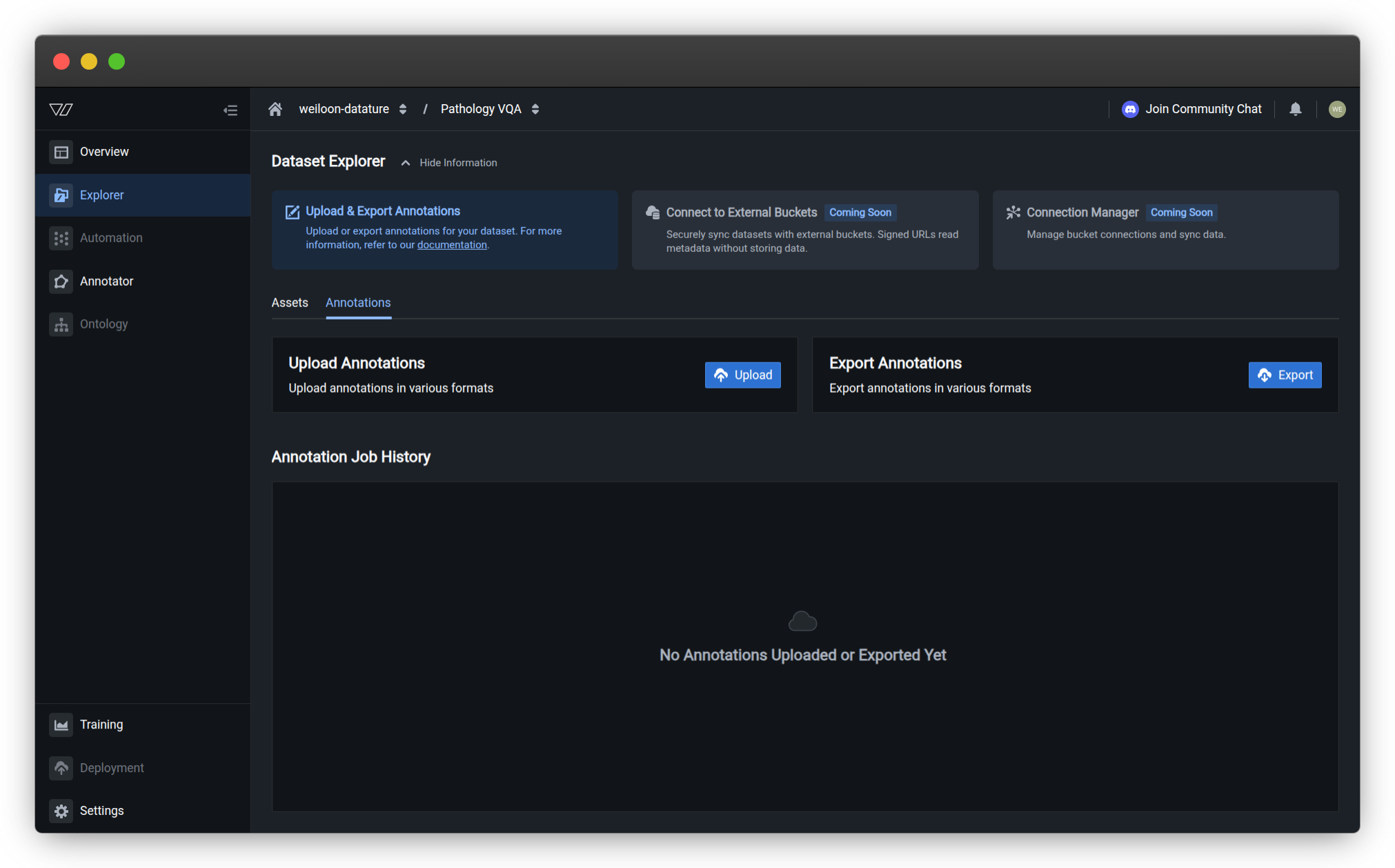

- From your dataset Explorer page, click the Annotations tab

- Click Upload in the Upload Annotations section

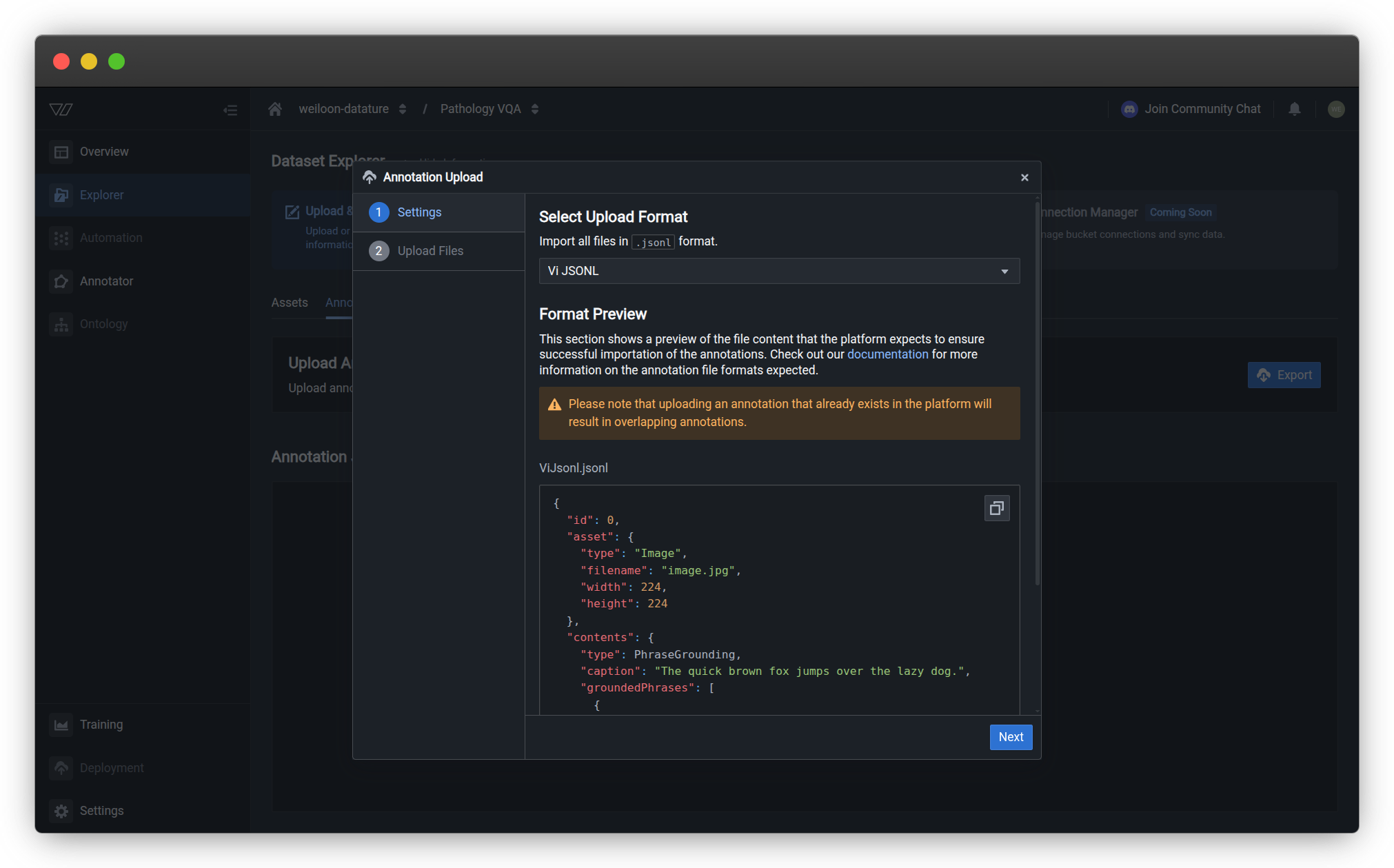

- Select your annotation format

Supported formats:

- Phrase Grounding: Vi JSONL, COCO, Pascal VOC, YOLO Darknet, YOLO Keras PyTorch, CSV Four Corner, CSV Width Height

- Visual Question Answering: Vi JSONL only

- Freeform: Vi JSONL (coming soon)
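The Phrase Grounding formats above differ mainly in how they store bounding boxes. In standard COCO, a box is `[x_min, y_min, width, height]` in pixels. In YOLO Darknet, each object is one text line with a class index followed by the box center and size, all normalized to the image dimensions. The minimal sketch below converts one convention into the other to show the difference. It assumes the standard public COCO and Darknet conventions, and the helper names are hypothetical, not part of Vi. Check the Upload annotations guide for exactly what Vi expects in each format.

```python
def coco_bbox_to_yolo(bbox, img_width, img_height):
    """Convert a COCO box [x_min, y_min, width, height] in pixels into
    YOLO Darknet (x_center, y_center, width, height), normalized to 0-1."""
    x_min, y_min, box_w, box_h = bbox
    x_center = (x_min + box_w / 2) / img_width
    y_center = (y_min + box_h / 2) / img_height
    return (x_center, y_center, box_w / img_width, box_h / img_height)


def to_darknet_line(class_index, bbox, img_width, img_height):
    """Format one object as a YOLO Darknet label line: 'class xc yc w h'.
    Hypothetical helper for illustration only."""
    xc, yc, w, h = coco_bbox_to_yolo(bbox, img_width, img_height)
    return f"{class_index} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"


# A 200x100 px box whose top-left corner is at (100, 50) in a 640x480 image.
print(to_darknet_line(0, [100, 50, 200, 100], 640, 480))
# 0 0.312500 0.208333 0.312500 0.208333
```

Darknet class indices are zero-based, while COCO category IDs often start at 1. If you convert between formats yourself, map categories explicitly rather than reusing the raw IDs.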

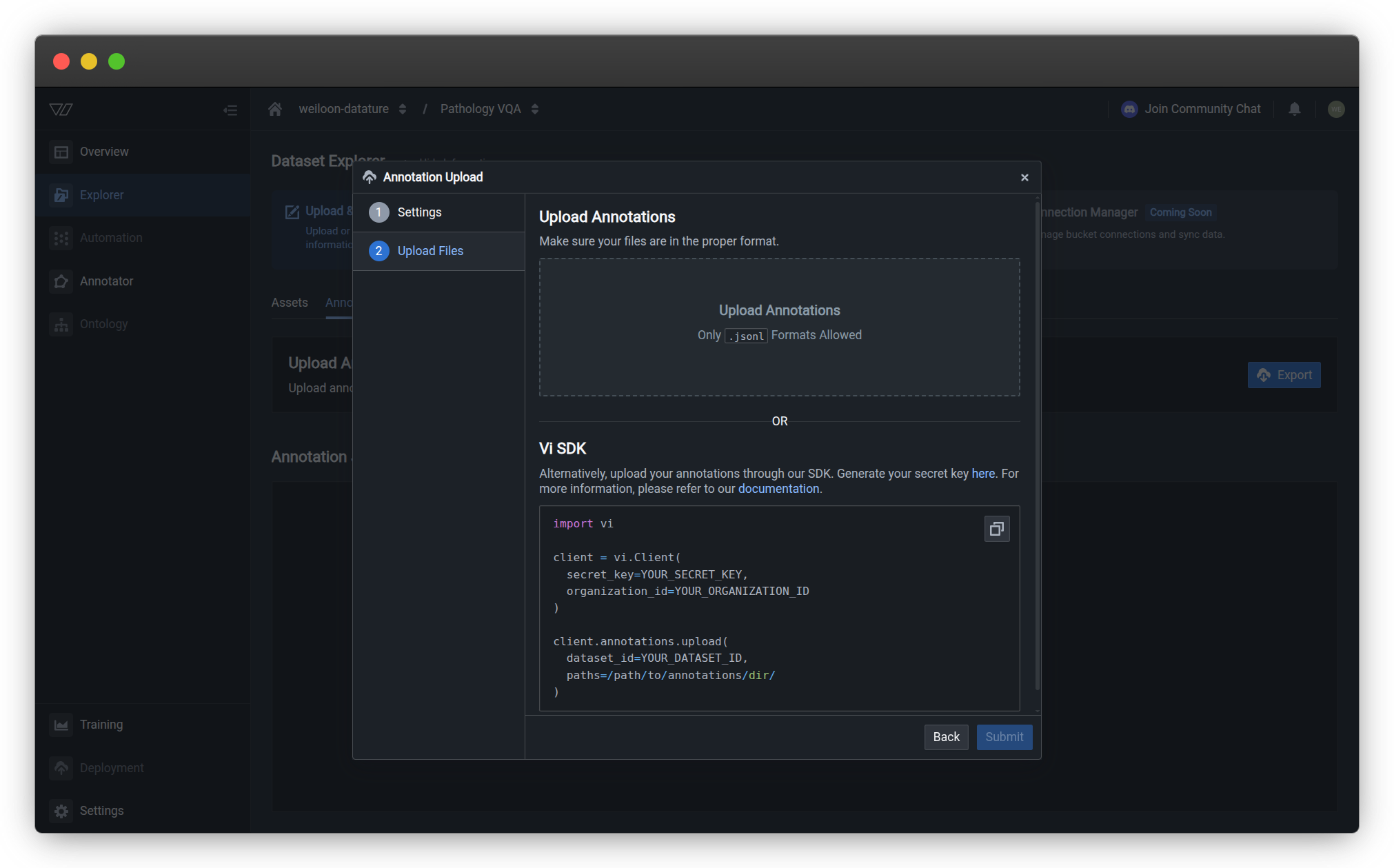

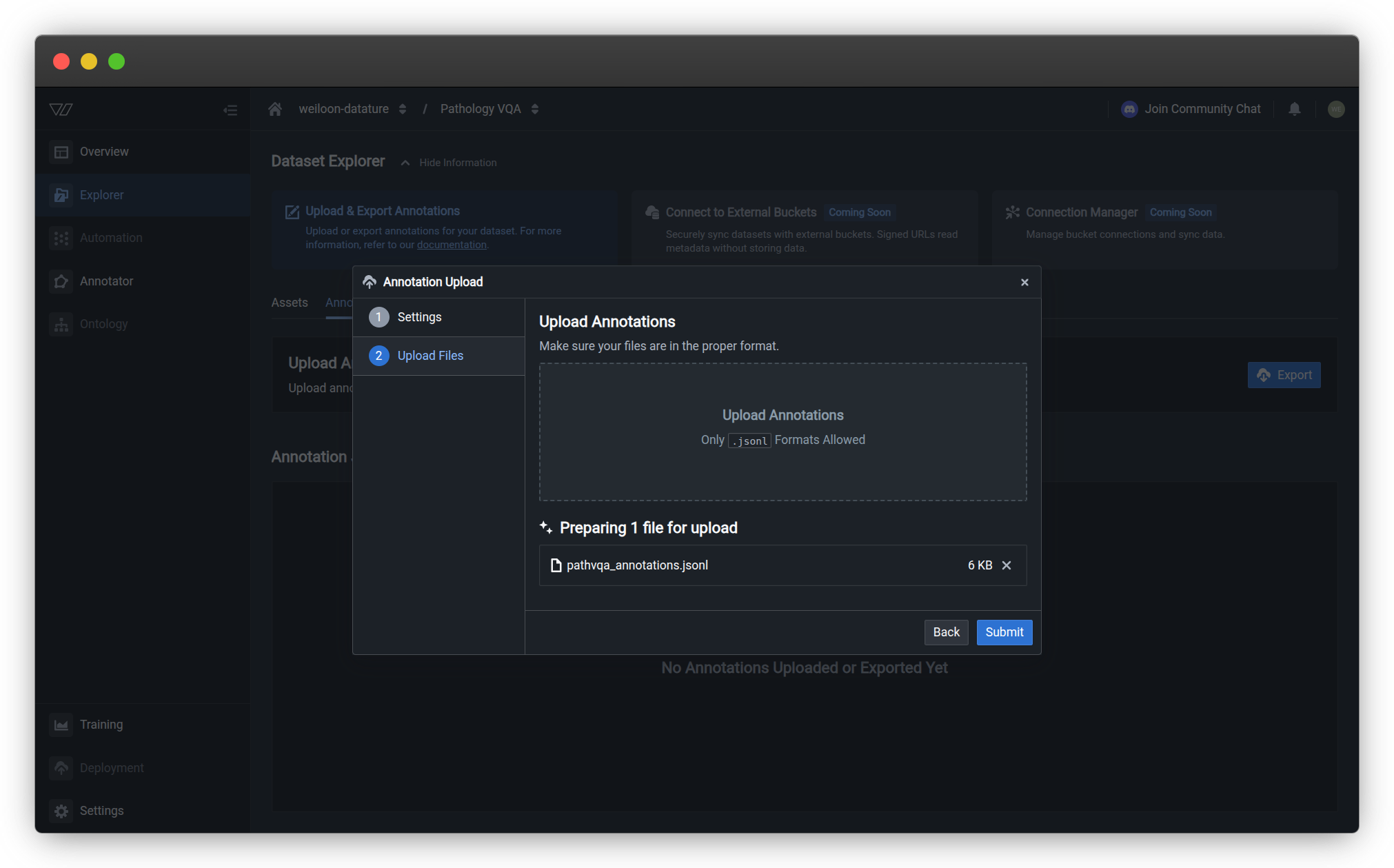

- Upload your annotation files

Important: Annotation files must reference images by filename. Make sure every filename in your annotation files exactly matches the name of an image in your dataset.
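Before uploading, you can run a local check to catch filename mismatches. The minimal sketch below assumes a COCO-format file, where each entry in `images` has a `file_name`. It lists any referenced filename that has no exact match among your image files. The function names are hypothetical, and the comparison is deliberately strict (case and extension must match). This is a convenience check, not Vi's own validation logic.

```python
import json
from pathlib import Path


def missing_images(coco, image_names):
    """Return COCO file_name values that have no exact match in image_names."""
    available = set(image_names)
    referenced = {img["file_name"] for img in coco.get("images", [])}
    return sorted(referenced - available)


def check_dataset(coco_json_path, image_dir):
    """Load a COCO JSON file from disk and compare it with the files in image_dir."""
    with open(coco_json_path, encoding="utf-8") as f:
        coco = json.load(f)
    names = [p.name for p in Path(image_dir).iterdir() if p.is_file()]
    return missing_images(coco, names)


if __name__ == "__main__":
    coco = {"images": [{"id": 1, "file_name": "cat_01.jpg"},
                       {"id": 2, "file_name": "Dog_02.JPG"}]}
    # Only the exact-case "dog_02.jpg" exists locally, so "Dog_02.JPG" is flagged.
    print(missing_images(coco, ["cat_01.jpg", "dog_02.jpg"]))  # ['Dog_02.JPG']
```

For a real dataset, call `check_dataset("annotations.json", "images/")` with your own paths. An empty list means every referenced file was found.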

Common questions

How many annotations do I need?

Minimum: 20-50 annotations for basic training

Recommended: 100+ annotations for better results

In general, more annotations tend to improve model performance.
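The guideline above refers to a total count. It can also help to see how your annotations are spread across classes, because a class with very few examples may be learned poorly. The minimal sketch below counts annotations per category in a COCO-format file. Applying the 20-annotation minimum to each class is an assumption for illustration, not a stated Vi requirement, and the function names are hypothetical.

```python
def annotations_per_category(coco):
    """Count annotations per category name in a COCO-style dict.
    Categories with no annotations are reported with a count of 0."""
    id_to_name = {c["id"]: c["name"] for c in coco.get("categories", [])}
    counts = {name: 0 for name in id_to_name.values()}
    for ann in coco.get("annotations", []):
        cid = ann["category_id"]
        name = id_to_name.get(cid, f"unknown:{cid}")
        counts[name] = counts.get(name, 0) + 1
    return counts


def below_minimum(counts, minimum=20):
    """Return category names with fewer than `minimum` annotations.
    The per-class threshold of 20 is an assumption, not a Vi rule."""
    return sorted(name for name, n in counts.items() if n < minimum)


coco = {
    "categories": [{"id": 1, "name": "cat"}, {"id": 2, "name": "dog"}],
    "annotations": [{"category_id": 1}] * 30 + [{"category_id": 2}] * 5,
}
counts = annotations_per_category(coco)
print(counts)                 # {'cat': 30, 'dog': 5}
print(sum(counts.values()))   # 35
print(below_minimum(counts))  # ['dog']
```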

Can I add annotations later?

Yes! You can:

- Upload more annotations anytime

- Continue labeling images after starting training

- Update existing annotations

Each training run uses a snapshot of your data taken when the run starts. Annotations you add or change afterward apply only to later runs.

What if I don't have annotations yet?

No problem! You have options:

- Create them now — Use the annotator (takes more time but gives you full control)

- Use AI assistance — Speed up annotation with AI-powered tools

- Start with zero-shot — Some VLMs can work without annotations (lower accuracy)

What's next?

Dataset ready!

Your images are annotated and ready for training.

Create a training workflow, configure your VLM, and start fine-tuning your model.

