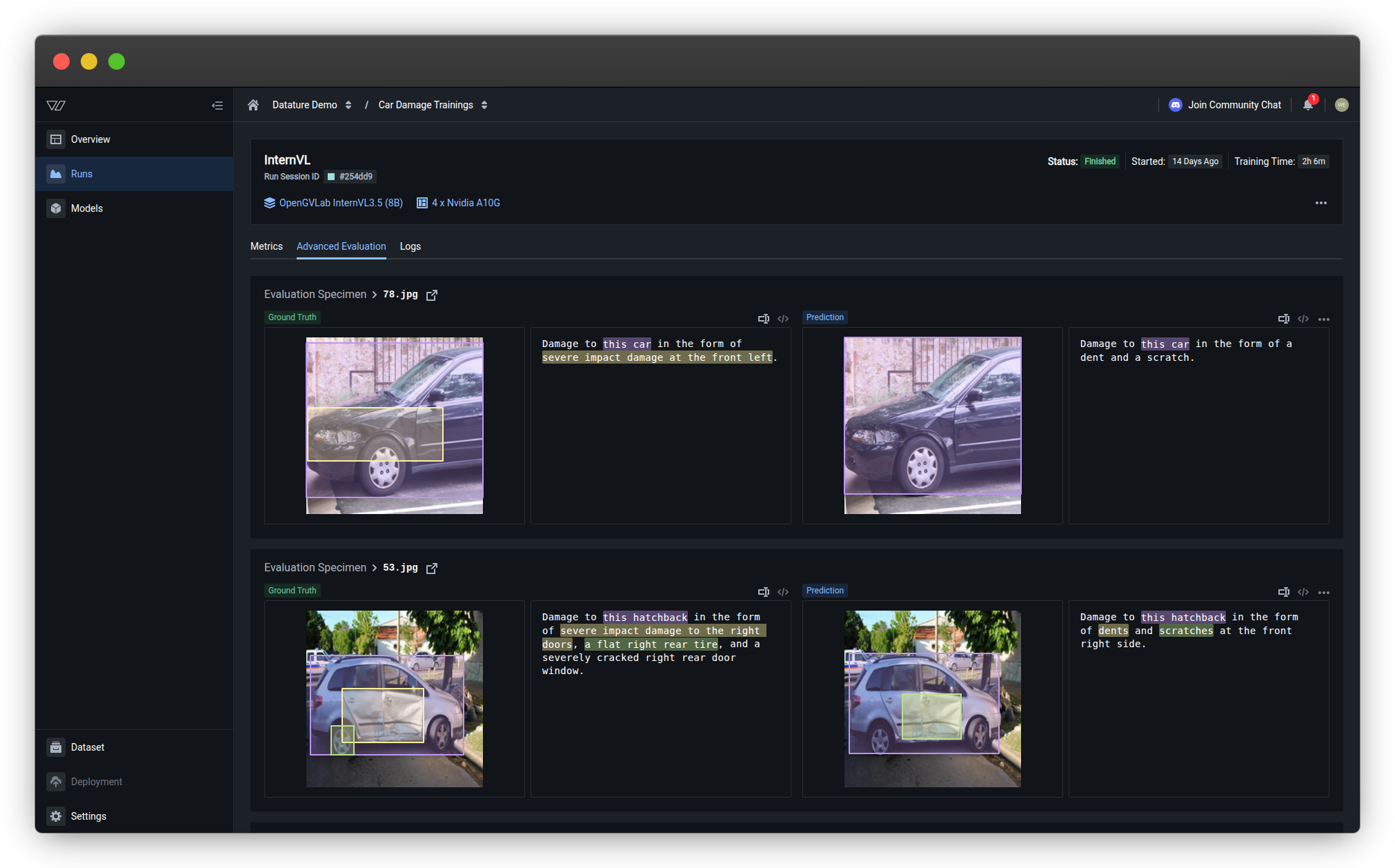

Advanced Evaluation

Compare ground truth annotations with model predictions side-by-side across training checkpoints.

The Advanced Evaluation tab provides visual inspection of model predictions compared to ground truth annotations. Use this to qualitatively assess model performance and identify specific failure modes.

Access Advanced Evaluation

Open any completed training run and click the Advanced Evaluation tab to view side-by-side comparisons of predictions.

Side-by-side comparison

Each evaluation specimen displays:

- Left panel (Ground Truth) — Your original annotations from the dataset

- Right panel (Prediction) — Model's predicted annotations or generated text

- Specimen identifier — Filename of the evaluation image

Navigate evaluation specimens

Use the image list to explore different validation examples:

- Scroll through the list of evaluation specimens

- Click any specimen to view its comparison

- Review both ground truth and predictions for that image

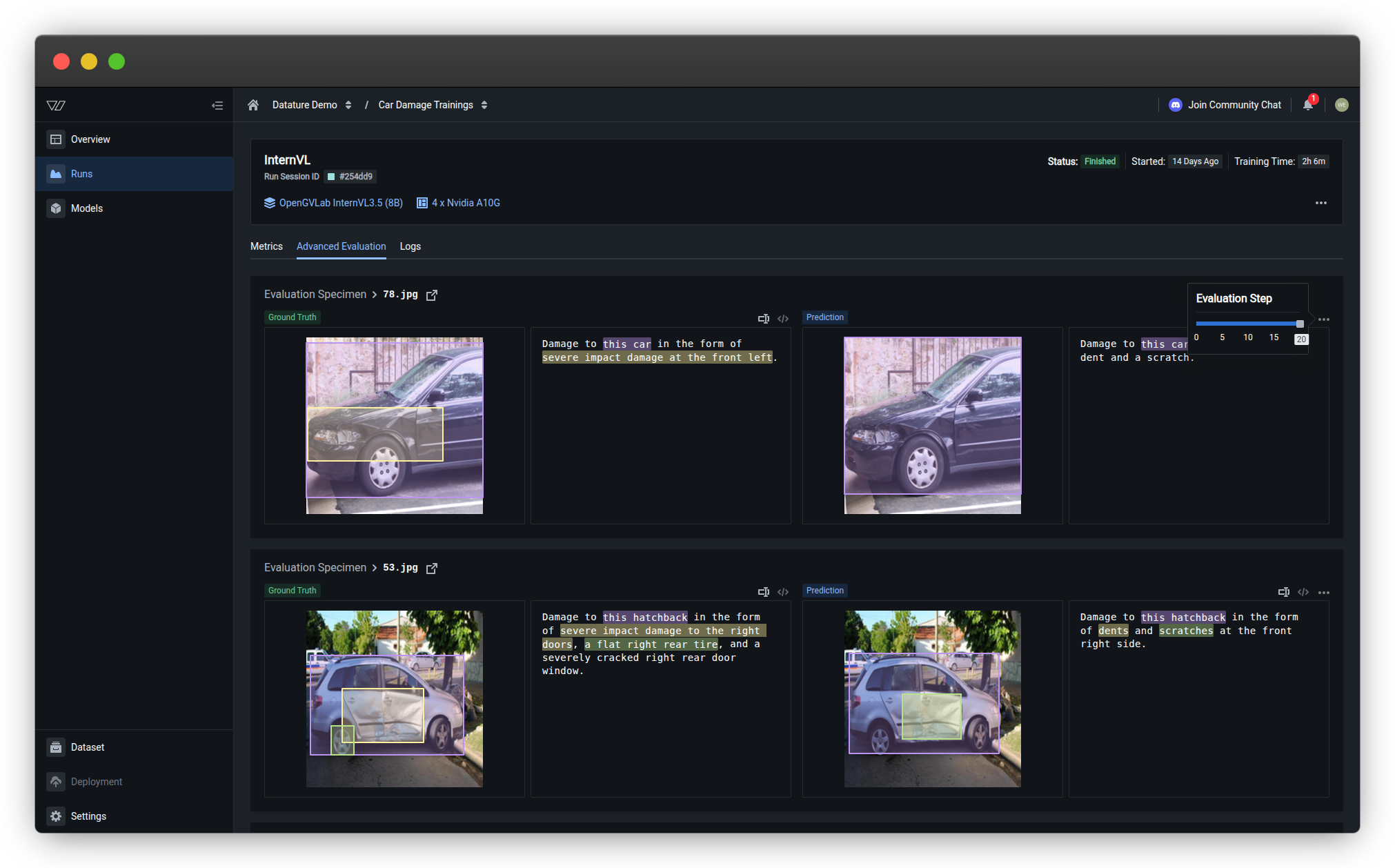

Scroll through evaluation checkpoints

Training saves evaluation results at multiple points during the run. Compare performance across different training stages to understand model improvement over time.

To navigate checkpoints:

- Click the three dots (•••) in the top-right corner of the Advanced Evaluation tab

- Use the Evaluation Step slider that appears

- Drag the slider to different checkpoint values (e.g., Step 0, 5, 10, 15, 20)

- Predictions update to show model performance at that training stage

What you'll typically see (step ranges are illustrative and depend on your run):

- Early checkpoints (Steps 0-5) — Initial predictions, often inaccurate or incomplete

- Middle checkpoints (Steps 5-15) — Progressive improvement as training continues

- Final checkpoints (Steps 15-20) — Mature model predictions at end of training

Understanding checkpoint intervals

What are evaluation checkpoints?

During training, the model periodically saves its state and runs predictions on validation data. Each saved state is a "checkpoint."

Checkpoint frequency:

Configured in Advanced Settings when launching the run:

- More frequent — More granular view of improvement (e.g., every 50 steps)

- Less frequent — Faster training with fewer evaluations (e.g., every 500 steps)
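To see how the interval setting translates into checkpoints on the Evaluation Step slider, the sketch below lists the steps at which evaluations would run. It assumes, hypothetically, that an evaluation happens at step 0, then every `eval_interval` steps, plus once at the final step if that step doesn't fall on the interval. The platform's actual schedule may differ, and `evaluation_steps` is an illustrative helper, not part of any product API.

```python
def evaluation_steps(total_steps: int, eval_interval: int) -> list[int]:
    """Return the training steps at which an evaluation checkpoint would be saved.

    Hypothetical schedule: step 0, then every `eval_interval` steps,
    plus the final step if it is not already a multiple of the interval.
    """
    if total_steps < 0 or eval_interval <= 0:
        raise ValueError("total_steps must be >= 0 and eval_interval > 0")
    steps = list(range(0, total_steps + 1, eval_interval))
    if steps[-1] != total_steps:
        steps.append(total_steps)
    return steps


# Compare a frequent and an infrequent schedule for a 1,000-step run.
frequent = evaluation_steps(1000, 50)
infrequent = evaluation_steps(1000, 500)
print(len(frequent), len(infrequent))  # 21 3
```

More frequent evaluation gives the slider more positions to compare, at the cost of running validation predictions more often.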

Step numbers:

Steps represent training iterations (not epochs). Each step processes one batch of data.

Example: 1,000 images ÷ batch size of 8 = 125 steps per epoch
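The arithmetic above generalizes to datasets that don't divide evenly by the batch size. This minimal sketch assumes one training step per batch (no gradient accumulation) and that a trailing partial batch is either kept (round up) or dropped (round down); which behavior your run uses is an assumption here, and `steps_per_epoch` is a hypothetical helper.

```python
def steps_per_epoch(num_images: int, batch_size: int, drop_last: bool = False) -> int:
    """Number of training steps (batches) needed to pass over the dataset once."""
    if num_images < 0 or batch_size <= 0:
        raise ValueError("num_images must be >= 0 and batch_size > 0")
    if drop_last:
        # A trailing partial batch is discarded, so round down.
        return num_images // batch_size
    # A trailing partial batch still counts as one step, so round up
    # (integer ceiling division avoids floating-point rounding).
    return (num_images + batch_size - 1) // batch_size


print(steps_per_epoch(1000, 8))                  # 125, matches the example above
print(steps_per_epoch(1002, 8))                  # 126: the last batch holds only 2 images
print(steps_per_epoch(1002, 8, drop_last=True))  # 125: the 2 leftover images are skipped
```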

Interpretation guide

For phrase grounding tasks

What to look for:

✅ Good predictions:

- Bounding boxes tightly aligned with objects

- All objects in ground truth are detected

- Minimal false positives (boxes on non-objects)

- Consistent performance across different images

⚠️ Common issues:

- Loose boxes — Boxes much larger than objects (low IoU)

- Missed detections — Objects in ground truth but not predicted (low recall)

- False positives — Boxes on background or incorrect objects (low precision)

- Inconsistent localization — Some boxes accurate, others misaligned
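IoU, precision, and recall can be made concrete with a short sketch. It assumes boxes are `(x_min, y_min, x_max, y_max)` tuples in pixel coordinates and greedily matches each prediction to its best unmatched ground-truth box at an IoU threshold of 0.5. Both the box format and the matching rule are assumptions for illustration; the numbers reported under View Metrics may be computed differently (for example, with confidence-ranked or per-class matching).

```python
from typing import List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes (0.0 to 1.0)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds: List[Box], gts: List[Box], threshold: float = 0.5) -> Tuple[float, float]:
    """Greedy one-to-one matching: each prediction claims the unmatched
    ground-truth box it overlaps most, provided IoU >= threshold."""
    matched = set()
    true_pos = 0
    for p in preds:
        best_idx: Optional[int] = None
        best_iou = threshold
        for i, g in enumerate(gts):
            if i in matched:
                continue
            score = iou(p, g)
            if score >= best_iou:
                best_idx, best_iou = i, score
        if best_idx is not None:
            matched.add(best_idx)
            true_pos += 1
    # Unmatched predictions are false positives; unmatched ground truths are missed detections.
    precision = true_pos / len(preds) if preds else 0.0
    recall = true_pos / len(gts) if gts else 0.0
    return precision, recall


gts = [(10, 10, 50, 50), (60, 60, 100, 100)]
preds = [
    (12, 12, 48, 48),      # tight box on the first object
    (0, 0, 100, 100),      # oversized box around the second object
    (200, 200, 220, 220),  # box on empty background
]
print(round(iou(preds[0], gts[0]), 2))  # 0.81
print(round(iou(preds[1], gts[1]), 2))  # 0.16
p, r = precision_recall(preds, gts)
print(round(p, 2), r)  # 0.33 0.5
```

In this example the oversized box scores an IoU of only 0.16 against the object it surrounds, so it fails the threshold and counts as a false positive, while the second object counts as a missed detection.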

Action items:

- Loose boxes → Add more training examples with tight annotations

- Missed detections → Increase training data for underrepresented classes

- False positives → Add negative examples (images without target objects)

- Inconsistent performance → Improve annotation consistency and data diversity

For VQA tasks

What to look for:

✅ Good predictions:

- Generated text matches ground truth meaning (even if wording differs)

- Answers are factually correct based on image content

- Responses are concise and well-formatted

- Model correctly handles edge cases (ambiguity, uncertain answers)

⚠️ Common issues:

- Hallucinations — Model invents details not visible in image

- Incomplete answers — Missing key information from ground truth

- Format errors — Wrong answer structure (e.g., verbose when concise expected)

- Ambiguity handling — Incorrect confidence in uncertain situations
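When scanning many VQA specimens, a rough lexical score can help flag which answers to inspect first. The sketch below uses SQuAD-style token F1 (lowercasing, stripping punctuation and English articles, then counting overlapping words). This is a swapped-in heuristic, not the platform's metric: it rewards shared words rather than shared meaning, so a correct paraphrase can still score low. The function names are hypothetical.

```python
import re
import string
from collections import Counter


def normalize(text: str) -> list[str]:
    """Lowercase, drop punctuation and English articles, split on whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return text.split()


def token_f1(prediction: str, ground_truth: str) -> dict[str, float]:
    """Word-overlap precision, recall, and F1 between two answers."""
    pred_tokens = normalize(prediction)
    gt_tokens = normalize(ground_truth)
    if not pred_tokens and not gt_tokens:
        return {"precision": 1.0, "recall": 1.0, "f1": 1.0}
    # Multiset intersection: a shared word counts at most as often as it appears in both.
    overlap = sum((Counter(pred_tokens) & Counter(gt_tokens)).values())
    if overlap == 0:
        return {"precision": 0.0, "recall": 0.0, "f1": 0.0}
    precision = overlap / len(pred_tokens)  # share of predicted words found in the ground truth
    recall = overlap / len(gt_tokens)       # share of ground-truth words the prediction covered
    f1 = 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}


scores = token_f1("There are two red cars.", "Two red cars are parked on the street.")
print({k: round(v, 2) for k, v in scores.items()})
# {'precision': 0.8, 'recall': 0.57, 'f1': 0.67}
```

Low recall with high precision, as here, points to an incomplete answer; the reverse (high recall, low precision) often signals a verbose answer where a concise one was expected.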

Action items:

- Hallucinations → Add more diverse training data; refine system prompt for grounded responses

- Incomplete answers → Provide examples of complete answers in system prompt

- Format errors → Clarify answer format expectations in system prompt

- Ambiguity issues → Add training examples demonstrating uncertainty handling
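As an illustration of the system prompt changes above, here is one hypothetical way to state grounding rules, an answer format, an uncertainty fallback, and a worked example in a single prompt. The wording, the fallback phrase, and the `SYSTEM_PROMPT` variable name are all assumptions; adapt them to your dataset's ground truth style so that the prompt instructions and the training answers agree.

```python
# Hypothetical system prompt text; adjust the rules and example to match your ground truth answers.
SYSTEM_PROMPT = """You are a visual question answering assistant.
Rules:
- Answer only from what is visible in the image. Do not guess at details you cannot see.
- Answer in one short sentence.
- If the image does not contain enough information, reply exactly: "Cannot determine from the image."

Example:
Question: How many people are in the photo?
Answer: There are three people in the photo."""

print(SYSTEM_PROMPT)
```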

Related resources

- View Metrics — Quantitative performance measurements

- Check Logs — Debug errors and troubleshoot failures

- Monitor a Run — Track progress and understand run statuses

- Evaluate a Model — Complete evaluation guide

- Train a Model — Configure and launch training

