Kill a Run

Stop active training runs before completion to free resources or abandon incorrect configurations.

Kill active training runs to stop them immediately when you need to free GPU resources, fix configuration errors, or abandon a training session. Killing is permanent: a killed run cannot be resumed from where it stopped.

Killing is permanent

- Cannot resume — Killed runs cannot be restarted from their current progress

- Credits consumed — Compute Credits for elapsed training time are not refunded

- State saved — Training progress up to kill point is preserved for review

- Must kill before delete — Active runs must be killed before you can delete them

When to kill a training run

Consider killing active training runs in these situations:

Configuration errors

- Wrong dataset selected — Training on incorrect data

- Incorrect model architecture — Need different VLM size or type

- Invalid training parameters — Batch size, learning rate, or other settings wrong

- Wrong system prompt — Prompt doesn't match intended task

Best practice: Kill immediately to avoid wasting Compute Credits on misconfigured training.

Training issues

- Out of memory errors — GPU cannot handle current configuration

- Loss not decreasing — Model not learning after several epochs

- Unexpected behavior — Metrics showing anomalous values (NaN, infinite)

- Training stuck — Progress not advancing for extended period

Best practice: Monitor runs closely during early stages to catch issues quickly.
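The NaN/infinite-loss and "loss not decreasing" signals above can be checked mechanically if you copy per-epoch loss values from the run's metrics or logs. The sketch below is a minimal illustration, not a Datature Vi feature: `check_loss_health` and its `patience` and `min_delta` parameters are hypothetical, and it assumes you already have one loss value per completed epoch.

```python
import math
from typing import List, Optional


def check_loss_health(losses: List[float], patience: int = 3,
                      min_delta: float = 1e-4) -> Optional[str]:
    """Return a reason to consider killing the run, or None if the loss looks healthy.

    Hypothetical helper: `losses` is assumed to hold one value per completed epoch.
    """
    # Any NaN or infinite value means training is numerically broken.
    for epoch, value in enumerate(losses, start=1):
        if math.isnan(value) or math.isinf(value):
            return f"non-finite loss at epoch {epoch}"

    # Not enough history yet to judge a trend; avoid killing prematurely.
    if len(losses) <= patience:
        return None

    # Compare the best loss in the last `patience` epochs with the best loss before them.
    best_before = min(losses[:-patience])
    best_recent = min(losses[-patience:])
    if best_before - best_recent < min_delta:
        return f"loss has not improved for {patience} epochs"
    return None


print(check_loss_health([2.1, 1.7, float("nan")]))  # non-finite loss at epoch 3
```

A flat stretch is a reason to look at the logs, not proof of failure; a non-finite loss, by contrast, means the run will not recover.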

Resource management

- Need GPU for higher priority work — Free resources for important training

- Testing workflow configurations — Quick experiments to validate settings

- Wrong localization region — Want to train in different geographic region

- Cost optimization — Preserve credits when training isn't critical

Workflow changes

- Better dataset available — Want to train on improved or expanded data

- Model update needed — Newer base model released

- Adjusted training strategy — Realized better approach mid-training

Kill early to save credits

The sooner you kill a misconfigured run, the more Compute Credits you preserve. Monitoring runs during the first 10-15 minutes helps catch issues before significant credit consumption.

Kill an active run

Stop a training run that is currently running, queued, or starting:

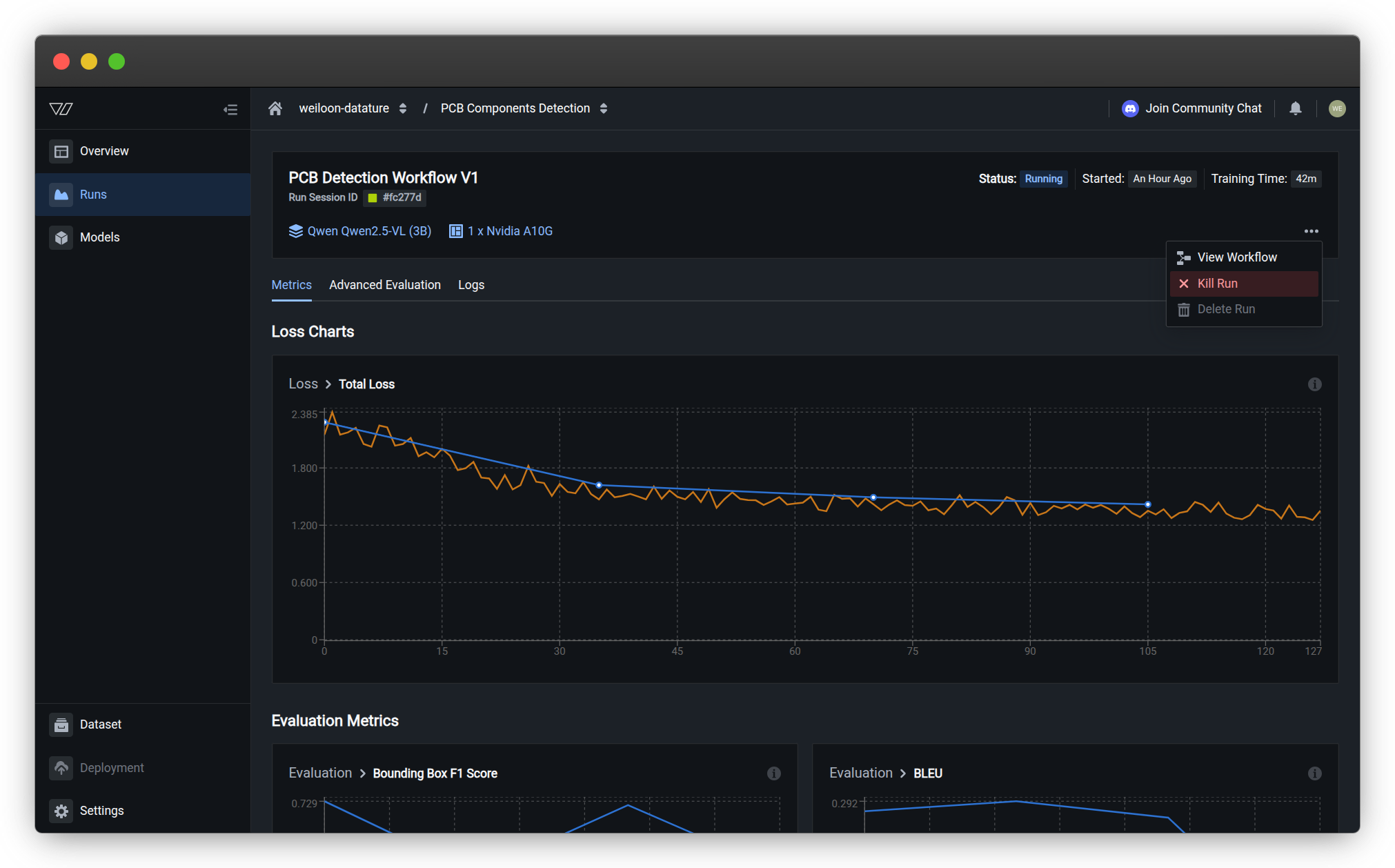

Access run actions

1. Navigate to the Training section from the sidebar
2. Click your training project to open it
3. Click the Runs tab to view all training runs
4. Locate the active run you want to stop (status: Running, Queued, or Starting)
5. Click the three-dot menu (⋮) next to the run
6. Select Kill Run from the dropdown menu

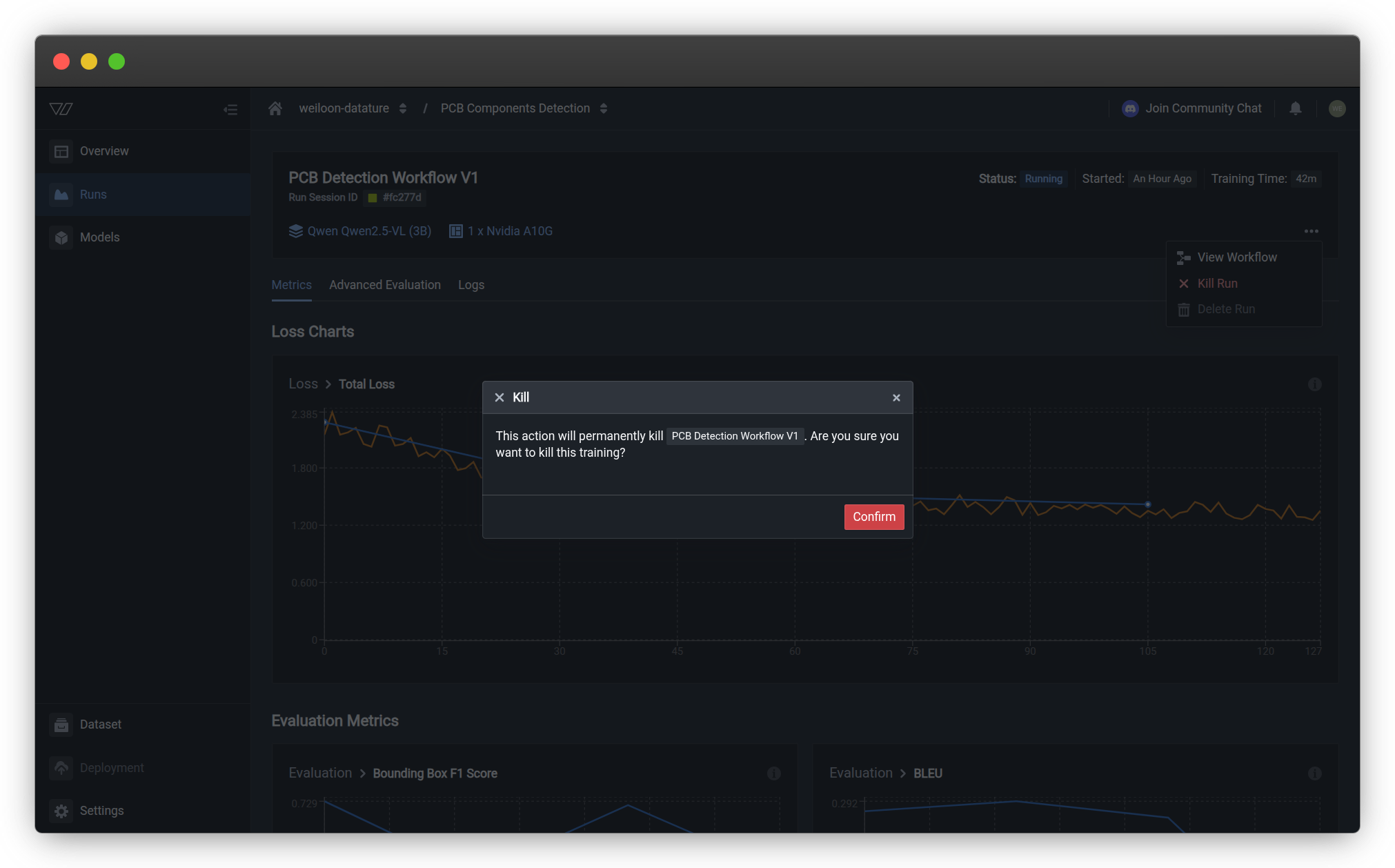

Confirm kill action

1. A confirmation dialog appears warning: "This action will permanently kill [Run Name]. Are you sure you want to kill this training?"
2. Review the warning carefully: killed runs cannot be resumed
3. Click the red Confirm button to kill the run immediately
4. The run status changes to "Killed" and training stops

Training stopped successfully

Your run is now killed and GPU resources are freed. You can:

- Review progress made before killing using metrics and logs

- Start a new run with corrected workflow settings

- Delete the killed run to clean up your project

What happens when you kill a run

Killing a training run triggers the following sequence:

Immediate effects

Training stops:

- GPU processing halts immediately

- No further training epochs execute

- Model training progress freezes at current point

Resources freed:

- GPU allocation released

- Queued runs can start if waiting for resources

- System capacity becomes available

Status updates:

- Run status changes to "Killed" (from Running, Queued, or Starting)

- A timestamp records when the run was killed

- Progress indicators show final state before kill

Data preservation

Saved for review:

- Training progress up to kill point remains visible

- Metrics and loss charts show progress before stopping

- Logs contain full training output until kill

- Configuration settings from workflow remain accessible

Not available:

- Trained model — Incomplete training produces no usable model

- Final metrics — Evaluation incomplete

- Continued training — Cannot resume from killed state

Credit consumption

Compute Credits charged:

- All GPU time from run start until killed

- Validation cycles that completed before kill

- Overhead time for setup and processing

Credits not charged:

- Remaining estimated training time

- Queue time waiting for resources

- Time after kill action

Example credit calculation

Scenario:

- Estimated total training: 60 minutes

- Killed after: 12 minutes of active training

- Estimated credits: 10 credits total

Credits consumed: ~2 credits (12 of 60 minutes, or 20% of the 10-credit estimate)

Credits saved: ~8 credits (remaining 48 minutes not executed)
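The scenario above is a straight proration of the estimate by active training time. The sketch below reproduces that arithmetic under a simplifying assumption: credits accrue linearly with active GPU minutes. `estimate_credits_consumed` is a hypothetical helper, not a Datature Vi API, and real charges also include setup overhead and completed validation cycles, which is why the figures above are approximate. Queue time is excluded by passing only active training minutes.

```python
def estimate_credits_consumed(estimated_total_credits: float,
                              estimated_total_minutes: float,
                              active_minutes: float) -> float:
    """Prorate the credit estimate by active training time (queue time excluded).

    Assumption: credits scale linearly with active GPU minutes; overhead is ignored.
    """
    if estimated_total_minutes <= 0 or active_minutes < 0:
        raise ValueError("minutes must be positive (total) and non-negative (active)")
    return estimated_total_credits * active_minutes / estimated_total_minutes


consumed = estimate_credits_consumed(10, 60, 12)
saved = 10 - consumed
print(f"consumed ~{consumed:.1f}, saved ~{saved:.1f}")  # consumed ~2.0, saved ~8.0
```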

After killing a run

Once you've killed a training run, choose your next action:

Review what went wrong

Understand why training needed to be killed:

- Check logs for error messages or warnings

- Review metrics to see if training was progressing

- Examine configuration in workflow settings

- Identify root cause of issues requiring kill

Fix and restart training

Correct configuration issues and start fresh:

1. Update workflow settings to fix identified problems:
   - Adjust the model architecture if you hit memory issues
   - Fix the dataset selection if training used the wrong data
   - Modify training parameters if needed
   - Update the system prompt if it was incorrect
2. Start a new run with the corrected configuration
3. Monitor the new run carefully during its initial stages

Clean up killed run

Remove the killed run after extracting useful information:

1. Document lessons learned from the failure
2. Save any useful metrics or configuration details
3. Delete the killed run to keep your project organized

Killed runs must be deleted manually

Unlike some platforms, Datature Vi does not automatically delete killed runs. They remain in your project until you explicitly delete them.

This preserves training history for debugging and learning purposes.

Cannot kill completed runs

You can only kill runs that are actively executing or waiting to execute. Completed runs cannot be killed.

Which runs can be killed

Killable statuses:

- Running — Actively training on GPU

- Queued — Waiting for GPU resources to become available

- Starting — Allocating resources and preparing environment

Which runs cannot be killed

Non-killable statuses:

- Completed — Training finished successfully

- Failed — Training stopped due to errors

- Killed — Already killed (obviously)
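The killable and non-killable statuses above form a simple rule: only runs that are executing or waiting to execute can move to Killed, and Killed is terminal. The sketch below models that rule. The status names come from this page, but the `RunStatus` enum and the `can_kill` and `kill` functions are hypothetical illustrations, not part of any Datature Vi SDK.

```python
from enum import Enum


class RunStatus(Enum):
    # Status names follow this page; the enum itself is a hypothetical model.
    RUNNING = "Running"
    QUEUED = "Queued"
    STARTING = "Starting"
    COMPLETED = "Completed"
    FAILED = "Failed"
    KILLED = "Killed"


# Only runs that are executing or waiting to execute can be killed.
KILLABLE = frozenset({RunStatus.RUNNING, RunStatus.QUEUED, RunStatus.STARTING})


def can_kill(status: RunStatus) -> bool:
    return status in KILLABLE


def kill(status: RunStatus) -> RunStatus:
    """Model the transition: a killable run moves to Killed; anything else is rejected."""
    if not can_kill(status):
        raise ValueError(f"cannot kill a run with status {status.value}")
    return RunStatus.KILLED
```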

For completed runs:

- Delete them if you no longer need them

- Download models from successful completions before deletion

- Review metrics to understand results

Best practices

Monitor initial stages before killing

Before deciding to kill a run, verify it's actually problematic:

- Monitor progress for at least 5-10 minutes

- Check whether the run is advancing through the preprocessing and setup stages

- Review logs for actual errors vs. normal warnings

- Wait for first metrics to appear if training just started

Don't kill prematurely:

- Initial stages can take several minutes

- Some warnings are normal and don't indicate failure

- First epoch often slower than subsequent epochs

Do kill immediately if:

- Clear configuration errors visible

- Out of memory errors appearing

- Training stuck at same point for 15+ minutes

- Wrong workflow started accidentally
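The "stuck for 15+ minutes" rule above depends on knowing when progress last changed, not just how long the run has been active. A minimal sketch, assuming you record hypothetical `(observed_at, progress_value)` snapshots each time you check the run (for example, the epoch or step count shown on the run page); `minutes_since_progress` and `is_stuck` are illustrative helpers, not platform features.

```python
from datetime import datetime, timedelta
from typing import List, Tuple


def minutes_since_progress(snapshots: List[Tuple[datetime, int]], now: datetime) -> float:
    """Minutes since the recorded progress value last changed.

    `snapshots` holds (observed_at, progress_value) pairs in chronological order.
    """
    if not snapshots:
        raise ValueError("need at least one snapshot")
    # Treat the first observation as the last known change.
    last_change = snapshots[0][0]
    for (_, prev_value), (observed_at, value) in zip(snapshots, snapshots[1:]):
        if value != prev_value:
            last_change = observed_at
    return (now - last_change).total_seconds() / 60


def is_stuck(snapshots: List[Tuple[datetime, int]], now: datetime,
             threshold_minutes: float = 15.0) -> bool:
    return minutes_since_progress(snapshots, now) >= threshold_minutes


t0 = datetime(2024, 1, 1, 12, 0)
snaps = [(t0, 10), (t0 + timedelta(minutes=5), 20), (t0 + timedelta(minutes=20), 20)]
print(is_stuck(snaps, now=t0 + timedelta(minutes=21)))  # True: no change for 16 minutes
```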

Document why runs were killed

Keep notes on killed runs to avoid repeating mistakes:

Record:

- What went wrong (configuration error, resource issue, etc.)

- Why you killed it instead of letting it complete

- What you changed before restarting

- Lessons learned for future training

Benefits:

- Avoid repeating same configuration errors

- Track patterns in training issues

- Share knowledge with team members

- Improve workflow configurations over time

Fix root causes before restarting

Don't restart immediately after killing; fix the underlying issues first:

Configuration problems:

- Update workflow settings with corrections

- Test configuration with shorter training if possible

- Verify dataset is correct and accessible

- Review model compatibility with your task

Resource problems:

- Reduce batch size if out of memory

- Select smaller model if architecture too large

- Resize images in dataset if too high resolution

- Check credit availability before long training

Validation:

- Test with minimal epochs (e.g., 5 epochs) to validate fixes

- Scale up gradually after confirming setup works

- Monitor closely during initial restart

Use kill for quick experiments

Killing is useful for rapid workflow testing:

Strategy:

- Start training with test configuration

- Monitor first few minutes to verify setup correct

- Kill after validation (don't need full training)

- Iterate quickly on configurations

Good for:

- Testing new dataset preparations

- Validating system prompt formats

- Checking model architecture compatibility

- Confirming training parameters don't cause errors

Not good for:

- Actual model training (obviously)

- Generating metrics for evaluation

- Producing deployable models

Consider credit costs before killing

Make informed decisions about whether to kill or continue:

Questions to ask:

1. How much training time has elapsed?
   - Early (<10 min): Minimal credits lost if you kill
   - Mid-training (30+ min): More credits invested
   - Late (>80% complete): Consider letting it finish
2. Is training clearly failing?
   - Yes: Kill immediately regardless of time
   - Maybe: Review logs and metrics to decide
   - No: Let it complete if it is making good progress
3. Can you fix the issue without restarting?
   - No, or only by restarting: Kill, fix the workflow, and start a new run
   - The issue resolves itself: Continue training

General principle: Kill early when you are certain of a problem, but let the run complete if training is progressing acceptably, even if not optimally.
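The three questions above can be folded into one decision function. This is a sketch of one reasonable reading of the guidance, not an official rule: `RunCheck` and `recommend` are hypothetical, the 80% threshold comes from the list above, and every input is a judgment you make yourself from the run's logs and metrics.

```python
from dataclasses import dataclass


@dataclass
class RunCheck:
    # All fields are hypothetical inputs you assess from logs and metrics.
    fraction_complete: float   # 0.0 to 1.0
    clearly_failing: bool      # e.g. OOM errors, NaN loss, wrong dataset
    making_progress: bool      # metrics improving, even if slowly
    fix_needs_new_run: bool    # issue can only be fixed by changing the workflow


def recommend(check: RunCheck) -> str:
    # Clear failure: kill regardless of time invested.
    if check.clearly_failing:
        return "kill"
    # Late in training and still progressing: let it finish.
    if check.fraction_complete > 0.8 and check.making_progress:
        return "continue"
    # A fix requires a new run anyway; killing now limits wasted credits.
    if check.fix_needs_new_run:
        return "kill"
    if check.making_progress:
        return "continue"
    # Ambiguous: review logs and metrics before deciding.
    return "review"
```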

Common questions

Can I resume a killed run?

No. Killed runs cannot be resumed or restarted from their stopped point.

Your options after killing:

- Start a new run — Begins fresh training from scratch

- Use same workflow — Reuses configuration from killed run

- Modify workflow — Update settings before starting new run

Training always starts from beginning:

- No checkpoint resumption from killed runs

- Each run is independent training session

- Progress from killed run cannot be continued

Workaround for long training:

- Configure workflows with shorter epoch counts

- Train incrementally with multiple runs

- Save intermediate results after each run

Will I get a refund for Compute Credits?

No. Compute Credits are consumed based on actual GPU time used, regardless of whether training completes.

What you're charged for:

- GPU time from run start until kill

- Validation and metric computation before kill

- Resource allocation and setup overhead

What you're not charged for:

- Remaining estimated training time not executed

- Queue time waiting for GPU resources

- Time after kill action completes

Why no refunds:

- GPU resources were allocated and used

- Training computations were actually executed

- Infrastructure costs incurred for running time

Credit savings:

- Killing early saves remaining training time credits

- Early detection of issues minimizes wasted credits

- Quick kills (<5 min) minimize lost credits

What if I killed the wrong run by accident?

Unfortunately, kills cannot be undone. Once you confirm the kill action:

- Training stops immediately and permanently

- Run cannot be resumed or restarted

- GPU resources are released

If you killed the wrong run:

- Start a new run immediately with the same configuration

- Use the same workflow to replicate the settings exactly

- Monitor the new run closely to confirm it is the correct one

- Delete the killed run after starting the replacement

Prevention tips:

- Double-check run name in kill confirmation dialog

- Verify run status before killing (ensure it's the problematic one)

- Review run start time to confirm identity

- Read confirmation message carefully before clicking Confirm

In the future:

- Use descriptive workflow names for easy identification

- Review run details before accessing kill option

- Take a moment to verify before confirming kill

Can I kill multiple runs at once?

Currently, runs must be killed one at a time through the interface. There is no bulk kill functionality.

To kill multiple runs:

1. Navigate to the Runs tab
2. For each run you want to stop:
   - Click the three-dot menu (⋮)
   - Select Kill Run
   - Confirm the action
3. Repeat for each active run

Use cases for killing multiple runs:

- Wrong workflow configurations applied to multiple runs

- Need to free all GPU resources immediately

- Testing scenarios where all test runs should be stopped

Efficiency tip: Work from newest to oldest so the most recent (likely higher-priority) runs are stopped first.
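Since there is no bulk kill, the manual loop above amounts to filtering the killable runs and handling them newest first. The sketch models only that ordering: `Run`, `kill_in_order`, and the `kill_run` callback are hypothetical, and `kill_run` stands in for the menu, Kill Run, Confirm steps you perform by hand; no bulk-kill API is implied.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Callable, List

# Statuses this page lists as killable.
KILLABLE_STATUSES = {"Running", "Queued", "Starting"}


@dataclass
class Run:
    name: str
    status: str
    created_at: datetime


def kill_in_order(runs: List[Run], kill_run: Callable[[Run], None]) -> List[str]:
    """Kill every killable run, newest first, and return the names in kill order."""
    targets = [r for r in runs if r.status in KILLABLE_STATUSES]
    targets.sort(key=lambda r: r.created_at, reverse=True)
    killed = []
    for run in targets:
        kill_run(run)  # placeholder for the manual per-run kill steps
        killed.append(run.name)
    return killed
```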

How long does killing take?

Killing is nearly instantaneous in most cases:

Typical sequence:

- You click Confirm in kill dialog

- Platform sends stop signal to training process (< 1 second)

- GPU processing halts immediately

- Status updates to "Killed" (< 5 seconds)

- Resources freed for other uses

Possible delays:

- Training in the middle of saving a checkpoint: Wait for the save to complete (< 30 seconds)

- Validation cycle in progress: Allow validation to finish (< 1 minute)

- Infrastructure communication delays: Rare, usually resolve within 1-2 minutes

If kill seems stuck:

- Refresh the page to see updated status

- Check the Logs tab for kill confirmation messages

- Wait 2-3 minutes for infrastructure to process

- Contact support if run still shows "Running" after 5 minutes
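The "if kill seems stuck" steps above are a poll-with-timeout: keep checking the status and escalate to support if it has not reached Killed after 5 minutes. A minimal sketch, assuming a hypothetical `get_status` callable that stands in for however you read the run status (for example, refreshing the Runs tab); it is not a Datature Vi API. The `sleep` and `clock` parameters default to the standard library and exist only so the loop can be exercised without real waiting.

```python
import time
from typing import Callable


def wait_for_killed(get_status: Callable[[], str],
                    timeout_s: float = 300.0,
                    poll_s: float = 10.0,
                    sleep: Callable[[float], None] = time.sleep,
                    clock: Callable[[], float] = time.monotonic) -> bool:
    """Poll until the run reports "Killed" or the timeout (default 5 minutes) expires.

    Returns True if the kill was confirmed, False if it is time to contact support.
    """
    deadline = clock() + timeout_s
    while True:
        if get_status() == "Killed":
            return True
        if clock() >= deadline:
            return False
        sleep(poll_s)
```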

Resource availability:

- GPU resources usually available for new runs within 1-2 minutes after kill

- Queued runs start automatically once resources free up

Will killing affect other concurrent runs?

No. Killing one run does not affect other active training runs:

Each run is isolated:

- Runs use dedicated GPU allocations

- Training processes are independent

- Killing one run doesn't impact others

Benefits:

- Kill problematic runs without disrupting successful training

- Free resources for specific high-priority runs

- Test configurations independently

Resource reallocation:

- GPU freed from killed run becomes available

- Queued runs may start using freed resources

- Other active runs continue unaffected

Project organization:

- Runs in different workflows are completely independent

- Runs in same workflow don't depend on each other

- Project structure unaffected by kills

Should I kill or wait for a failing run to complete?

Kill immediately if:

- ❌ Configuration is clearly wrong (can't produce useful results)

- ❌ Out of memory errors occurring (won't complete anyway)

- ❌ Loss showing NaN or infinite values (training broken)

- ❌ You need the GPU resources for something else urgent

Let complete if:

- ✅ Training progressing slowly but correctly

- ✅ Metrics improving even if suboptimal

- ✅ Near completion (>80% done) with acceptable progress

- ✅ Want to analyze results before deciding next steps

Consider carefully if:

- ⚠️ Mid-training with significant credits invested

- ⚠️ Training isn't optimal but producing some results

- ⚠️ Unsure if issue is temporary or permanent

- ⚠️ Want to see what metrics look like at completion

Decision framework:

- Review logs for error severity

- Check metrics for improvement trends

- Calculate credits consumed vs. remaining

- Assess if results would be usable even if suboptimal

General principle: Kill early and decisively when certain of failure; let complete if there's value in seeing final results.

Next steps

Remove killed or completed runs from your project

Track training progress and watch for issues

Start a new training run with corrected settings

Debug issues using detailed training logs

Related resources

- Manage Runs — Complete run management overview

- Monitor a Run — Track training progress in real-time

- Delete a Run — Clean up completed or killed runs

- Train a Model — Start new training sessions

- Training Logs — Detailed debugging information

- Resource Usage — Monitor Compute Credits

- Manage Workflows — Update training configurations
