Model Settings

Configure model architecture, training parameters, and inference settings for optimal VLM performance.


Model settings define the architecture, training approach, and inference behavior of your VLM. Proper configuration of these settings directly impacts training speed, memory usage, model quality, and inference performance.

Looking for a quick start? For streamlined workflow setup with recommended default settings:

Prerequisites

Before configuring model settings, ensure you have:

- An existing training project

- A configured dataset with train-test split

- Basic understanding of your computational resources and training goals

Understanding model settings

Model settings are organized into three main categories:

- Model Options — Architecture size, training mode, and memory optimization

- Hyperparameters — Training behavior and convergence settings

- Evaluation — Inference behavior and output generation

These settings work together to define:

- Training efficiency — How fast your model trains and how much memory it requires

- Model capacity — The model's ability to learn complex patterns

- Convergence behavior — How the model improves during training

- Inference quality — Output diversity, length, and coherence

Model options

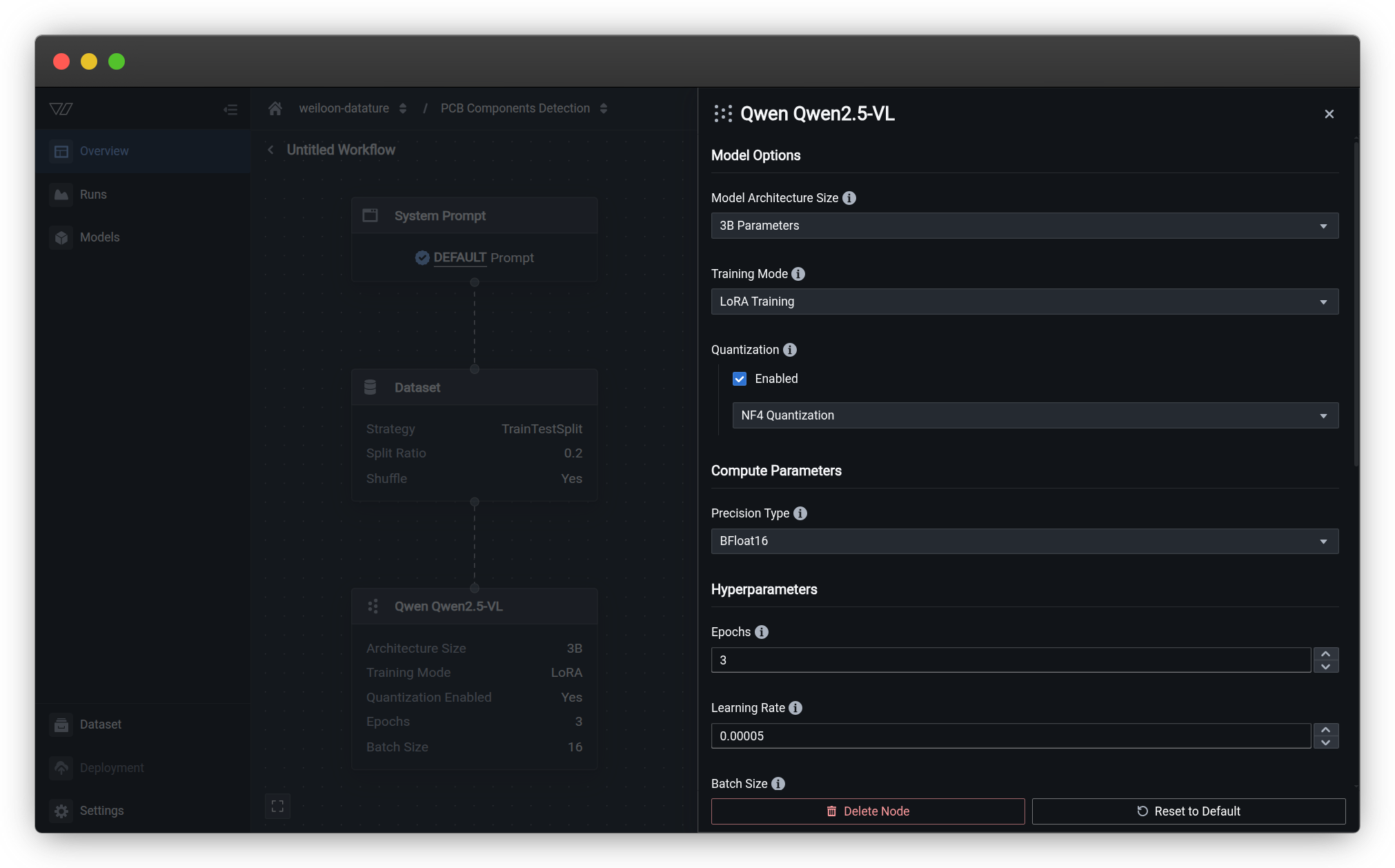

Access model configuration by clicking the Model node in the workflow canvas.

Model architecture size

The number of parameters in the neural network, measured in billions (B). This fundamental setting determines the model's capacity to learn and represent complex patterns.

Available sizes:

| Size | Parameters | Best for | Memory requirements |
|---|---|---|---|
| Small | 1-3B | Quick experiments, limited resources | 8-16 GB GPU |
| Medium | 7-13B | Standard production use cases | 16-32 GB GPU |
| Large | 20-34B | Complex tasks, high accuracy needs | 40-80 GB GPU |

How it impacts training:

- Larger models have more capacity to learn complex patterns and nuanced relationships

- Smaller models train faster and require less computational resources

- Memory usage scales roughly linearly with parameter count

Choosing the right size

Start with smaller models for initial experiments to validate your approach quickly. Scale up to larger models when you need higher accuracy or have proven the concept works with your data.

When to use each size:

Small models (1-3B parameters)

Best for:

- Initial prototyping and experimentation

- Limited GPU resources (single consumer GPU)

- Fast iteration during development

- Simple tasks with clear visual patterns

- Real-time inference requirements

Tradeoffs:

- Lower capacity for complex reasoning

- May struggle with subtle distinctions

- Faster training and inference

- Lower memory and compute costs

Example use cases:

- Binary classification (good/defective)

- Single object detection

- Simple quality control

Medium models (7-13B parameters)

Best for:

- Production deployments

- Most standard computer vision tasks

- Multi-class detection and classification

- Balanced performance and resource usage

Tradeoffs:

- Good balance of accuracy and efficiency

- Reasonable training times

- Moderate GPU requirements

- Suitable for most use cases

Example use cases:

- Multi-object detection

- Complex defect classification

- Retail product recognition

- General-purpose visual understanding

Large models (20-34B parameters)

Best for:

- Maximum accuracy requirements

- Complex reasoning tasks

- Fine-grained distinctions

- Production systems with ample resources

Tradeoffs:

- Highest accuracy potential

- Significantly longer training times

- High GPU memory requirements

- May require distributed training

Example use cases:

- Medical image analysis

- Detailed inspection tasks

- Open-ended visual reasoning

- Challenges requiring nuanced understanding

Training mode

Training mode determines how the model's parameters are updated during training. This critical choice affects training speed, memory usage, and model flexibility.

Available modes:

LoRA Training

Trains only small adapter layers while keeping the base model frozen.

- How it works: Adds small trainable low-rank matrices (Low-Rank Adaptation) alongside the frozen weights of the base model

- Memory requirements: Low (gradients and optimizer states are stored only for the adapter weights)

- Training time: Faster (fewer parameters to update)

- Flexibility: Good for most use cases, may be less flexible for drastically different tasks
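To make "small adapter layers" concrete, the sketch below counts trainable parameters for one weight matrix. It assumes the standard LoRA formulation, in which a frozen d × k weight W receives a trainable update B·A, with B of shape d × r and A of shape r × k. The 4096 × 4096 layer and rank 16 are illustrative values, not this product's defaults.

```python
def full_finetune_params(d: int, k: int) -> int:
    """Trainable parameters when the whole d x k weight matrix is updated."""
    return d * k


def lora_params(d: int, k: int, rank: int) -> int:
    """Trainable parameters for a LoRA update W + B @ A.

    B has shape (d, rank) and A has shape (rank, k); W itself stays frozen.
    """
    return d * rank + rank * k


# Illustrative (hypothetical) layer: a 4096 x 4096 projection with LoRA rank 16.
d, k, rank = 4096, 4096, 16
full = full_finetune_params(d, k)
lora = lora_params(d, k, rank)
print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.2%}")
```

Because the trainable count grows with r × (d + k) instead of d × k, gradients and optimizer state are kept for well under 1% of this layer's weights at rank 16.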

Full Finetuning (SFT)

Updates all model parameters during training using Supervised Fine-Tuning.

- How it works: Every layer in the neural network is adjusted based on your training data

- Memory requirements: High (requires storing gradients for all parameters)

- Training time: Longer (more parameters to update)

- Flexibility: Maximum adaptation to your specific task

Recommendation: Start with LoRA Training

LoRA Training is recommended for most use cases as it offers 2-3x faster training with significantly lower memory usage while maintaining quality comparable to Full Finetuning.

Comparison:

| Aspect | LoRA Training | Full Finetuning (SFT) |
|---|---|---|
| Memory usage | Low (3-5x reduction) | High |
| Training speed | Faster (2-3x speedup) | Slower |
| Adaptation flexibility | Good | Maximum |
| Best for | Most standard use cases | Drastically different tasks |
| GPU requirements | Consumer GPU sufficient | High-end GPU required |

When to use LoRA Training:

Standard use cases (recommended)

Use for most production applications:

- Standard computer vision tasks (detection, classification)

- Limited GPU resources

- Faster iteration during development

- Domain adaptation (e.g., retail → manufacturing)

- Cost-effective training

When to use Full Finetuning (SFT):

Maximum adaptation required

Use when your task is significantly different from the base model's training:

- Highly specialized domain (e.g., microscopy, satellite imagery)

- Novel visual patterns not seen in general training

- Maximum accuracy is critical and resources are available

- Task requires fundamental changes to feature extraction

Quantization

Quantization reduces the numerical precision of the stored model weights to save memory, enabling training of larger models on limited GPU resources. Both formats below represent each weight with 4 bits instead of 16 or 32.

Available formats:

NF4 (Normalized Float 4)

4-bit data type designed for values that follow a roughly normal (zero-centered) distribution, which is typical of pretrained neural network weights.

- Memory savings: ~4x reduction compared to 16-bit weights (~8x compared to Float32)

- Quality: Excellent preservation of model quality

- Best for: Neural network training (recommended default)

- Designed for: Transformer models and VLMs
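The sketch below illustrates the general idea behind blockwise 4-bit quantization: each block of weights is scaled by its absolute maximum (absmax) and mapped to one of a few integer codes. It is a simplified stand-in, not NF4 itself. NF4 uses 16 non-uniformly spaced levels derived from a normal distribution, whereas this sketch uses evenly spaced signed integers from -7 to 7; the sample weights are hypothetical.

```python
def quantize_block(values: list[float]) -> tuple[float, list[int]]:
    """Quantize one block to signed 4-bit integer codes in [-7, 7] with an absmax scale."""
    absmax = max(abs(v) for v in values) or 1.0  # avoid dividing by zero for all-zero blocks
    codes = [max(-7, min(7, round(v / absmax * 7))) for v in values]
    return absmax, codes


def dequantize_block(absmax: float, codes: list[int]) -> list[float]:
    """Map the 4-bit codes back to approximate floating-point values."""
    return [c * absmax / 7 for c in codes]


# Hypothetical block of 8 weights.
weights = [0.12, -0.50, 0.03, 0.31, -0.07, 0.49, -0.22, 0.00]
scale, codes = quantize_block(weights)
restored = dequantize_block(scale, codes)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes)
print(f"max abs error: {max_error:.4f}")
```

Each code fits in 4 bits, and only one scale value is stored per block, so storage approaches 4 bits per weight as blocks get larger.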

FP4 (4-bit Floating Point)

Standard 4-bit floating point quantization for weights.

- Memory savings: ~4x reduction compared to 16-bit weights (~8x compared to Float32)

- Quality: Very good

- Best for: General quantization, compatibility scenarios

- Use when: NF4 has compatibility issues or specific FP4 requirements

Recommendation: NF4

NF4 is recommended for VLM training because its 4-bit levels are matched to the roughly normal distribution of pretrained transformer weights, providing better quality preservation than FP4 with the same memory savings.

How quantization works

Quantization stores model weights with 4 bits each instead of 16 or 32 bits, reducing weight memory by approximately 4x relative to 16-bit precision (8x relative to 32-bit). This enables training larger models or using larger batch sizes with the same GPU memory.
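For the weights themselves, the arithmetic is bytes = parameters × bits ÷ 8. The sketch below applies it to a 13B-parameter model. It counts weight storage only and ignores activations, gradients, optimizer state, and quantization metadata such as per-block scales, so actual GPU usage, as in the impact example that follows, is higher.

```python
def weight_memory_gb(num_params: float, bits: int) -> float:
    """Memory needed to store the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits / 8 / 1e9


params_13b = 13e9
for name, bits in [("Float32", 32), ("16-bit", 16), ("4-bit", 4)]:
    print(f"{name:>8}: {weight_memory_gb(params_13b, bits):5.1f} GB")
```

At 4 bits, the 13B model's weights take about 6.5 GB versus 26 GB at 16-bit and 52 GB at Float32.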

Quantization formats compared:

| Format | Memory savings (vs. 16-bit) | Quality | Best for |
|---|---|---|---|
| NF4 | ~4x reduction | Excellent | Neural network training (recommended) |
| FP4 | ~4x reduction | Very good | General quantization, compatibility |

Impact example:

Without quantization (16-bit):

- 13B model: ~40 GB GPU memory

With NF4 quantization (4-bit):

- 13B model: ~12 GB GPU memory

- 3-4x memory reduction

- Minimal quality loss

When to use each format:

NF4 (recommended)

Use for most scenarios:

- Standard VLM training workflows

- Production model training

- When you want best quality with quantization

- Recommended default choice

Advantages:

- Optimized value distribution for neural network weights

- Better preservation of model quality than FP4

- Specifically designed for transformer architectures

- Minimal accuracy loss compared to full precision

- Industry best practice for VLM training

FP4

Use when:

- Compatibility issues with NF4

- Debugging quantization-related issues

- Specific requirements for standard floating point format

- Legacy configurations

Note: For most use cases, NF4 is preferred as it provides better quality with the same memory savings.

Precision type

The numerical format used for calculations during training. This setting balances computation speed, memory usage, and numerical stability.

Available options:

BFloat16

- Precision: 16-bit brain floating point format

- Accuracy: Better numerical stability than Float16 for large models

- Speed: ~2x faster than Float32

- Memory: 2x reduction vs Float32

- Use case: Preferred for training large models (recommended)

Float16

- Precision: 16-bit floating point

- Accuracy: Good for most use cases

- Speed: ~2x faster than Float32

- Memory: 2x reduction vs Float32

- Use case: Standard training with older GPUs

Float32

- Precision: 32-bit floating point

- Accuracy: Highest numerical precision

- Speed: Slowest (baseline)

- Memory: Highest usage

- Use case: Debugging numerical issues, research requiring maximum precision

Recommendation: BFloat16

BFloat16 is recommended for VLM training as it provides the speed and memory benefits of 16-bit precision with better numerical stability than Float16, which is especially important for large models.

Comparison table:

| Precision | Speed | Memory | Stability | Modern GPU support |
|---|---|---|---|---|
| BFloat16 | Fast (2x) | Low | Excellent | NVIDIA Ampere+, AMD MI200+ |
| Float16 | Fast (2x) | Low | Good | Universal |
| Float32 | Baseline (1x) | High | Excellent | Universal |

When to use each precision:

BFloat16 (recommended)

Best for most scenarios:

- Modern GPUs (NVIDIA A100, H100, RTX 30/40 series)

- Training large models (7B+ parameters)

- Balanced speed and stability

- Production training workflows

Why it's better:

- Preserves Float32's exponent range (better for extreme values)

- Reduces gradient underflow/overflow issues

- Widely supported in modern ML frameworks

- Minimal accuracy loss compared to Float32
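The range-versus-precision tradeoff can be observed with Python's standard library. The `struct` module packs IEEE 754 half precision (Float16) directly with the `'e'` format; BFloat16 has no `struct` format, so this sketch emulates it by keeping the upper 16 bits of a Float32 value. That emulation truncates rather than rounding to nearest, so treat the BFloat16 results as approximate.

```python
import math
import struct


def to_fp16(x: float) -> float:
    """Round x to IEEE 754 half precision (Float16); out-of-range values become inf."""
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except OverflowError:
        return math.copysign(math.inf, x)


def to_bf16(x: float) -> float:
    """Approximate BFloat16 by truncating a Float32 to its top 16 bits.

    BFloat16 keeps Float32's 8-bit exponent but only 7 mantissa bits.
    """
    high_bytes = struct.pack(">f", x)[:2]  # sign, exponent, top 7 mantissa bits
    return struct.unpack(">f", high_bytes + b"\x00\x00")[0]


for value in (1e-8, 1.001, 70000.0):
    print(f"{value!r:>10}: fp16={to_fp16(value)!r:<14} bf16={to_bf16(value)!r}")
```

Float16 keeps more mantissa bits (1.001 survives as 1.0009765625) but flushes 1e-8 to zero and overflows at 70,000. BFloat16 loses that fine precision yet represents both extremes, which is the property the list above describes.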

Float16

Use when:

- Older GPUs without BFloat16 support

- Maximum speed is critical

- Working with smaller models (<7B parameters)

Considerations:

- May encounter numerical instability with very large or small gradients

- Requires gradient scaling for stability

- Slightly more prone to training issues than BFloat16
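Gradient scaling (also called loss scaling) multiplies the loss, and therefore every gradient, by a large factor so that small gradients stay representable in Float16, then divides the factor back out in higher precision before the optimizer step. This sketch shows only the number-format effect on one hypothetical gradient value. The fixed factor of 2^16 is an illustrative assumption; framework utilities such as PyTorch's `GradScaler` adjust the factor dynamically during training.

```python
import struct


def to_fp16(x: float) -> float:
    """Round x to IEEE 754 half precision, as a Float16 gradient buffer would."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


true_grad = 1e-8   # hypothetical tiny gradient value
scale = 65536.0    # illustrative loss-scale factor (2**16)

unscaled_fp16 = to_fp16(true_grad)          # underflows to 0.0 in Float16
scaled_fp16 = to_fp16(true_grad * scale)    # representable after scaling
recovered = scaled_fp16 / scale             # unscale in full precision (Python float)

print(unscaled_fp16, scaled_fp16, recovered)
```

Without scaling, the gradient is lost entirely; with scaling, it survives Float16 storage and is recovered to within Float16's rounding error.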

Float32

Use when:

- Debugging numerical stability issues

- Research requiring maximum precision

- Training is unstable with lower precision

- GPU memory is not a constraint

Tradeoffs:

- 2x slower than Float16/BFloat16

- 2x more memory usage

- Rarely necessary for production training

Hyperparameters

Hyperparameters control the training process dynamics—how the model learns from your data and how quickly it converges.

Epochs

The number of complete passes through your entire training dataset. Each epoch represents one full cycle of training where the model sees every training image once.

How it works:

1 epoch = model sees all training images once

10 epochs = model sees all training images 10 times

Example calculation:

- 100 training images

- Batch size of 2

- 1 epoch = 50 training steps (100 images ÷ 2 per batch)

- 10 epochs = 500 total training steps
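The same arithmetic as a small helper. This sketch assumes a final partial batch is still processed as its own step (rounding up); some data loaders drop an incomplete last batch instead, which rounds down.

```python
def steps_per_epoch(num_images: int, batch_size: int) -> int:
    """Number of batches (training steps) in one pass over the dataset.

    Assumes a final, smaller batch is kept rather than dropped.
    """
    return (num_images + batch_size - 1) // batch_size  # integer ceiling division


def total_steps(num_images: int, batch_size: int, epochs: int) -> int:
    """Training steps across all epochs."""
    return steps_per_epoch(num_images, batch_size) * epochs


print(steps_per_epoch(100, 2))   # 50
print(total_steps(100, 2, 10))   # 500
print(steps_per_epoch(101, 2))   # 51 (last batch holds a single image)
```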

Choosing the right number:

Recommended epoch ranges

Small datasets (<100 images):

- Recommended: 100-300 epochs

- Reasoning: More passes needed to learn from limited data

- Watch for: Overfitting after ~200 epochs

Medium datasets (100-1000 images):

- Recommended: 50-150 epochs

- Reasoning: Balanced learning with sufficient data

- Watch for: Convergence plateau around 100 epochs

Large datasets (1000+ images):

- Recommended: 20-100 epochs

- Reasoning: Fewer passes needed with abundant data

- Watch for: Diminishing returns after 50-75 epochs

Signs you need more epochs

Increase epochs when:

- Training loss is still decreasing steadily

- Validation metrics improving each epoch

- Model hasn't converged yet

- Early in experimentation phase

Example: Training loss: epoch 50 = 0.45, epoch 100 = 0.32, epoch 150 = 0.28

- Still improving → continue training

Signs you need fewer epochs

Reduce epochs when:

- Training loss plateaus early

- Validation performance stops improving or degrades (overfitting)

- Training time is excessive

- Model converges quickly

Example: Training loss: epoch 30 = 0.25, epoch 60 = 0.24, epoch 90 = 0.24

- Converged at epoch 30 → reduce to 50 epochs

Pro tip: Use early stopping

Monitor validation metrics during training. If performance stops improving for 10-20 epochs, training can often be stopped early. Create multiple runs with different epoch counts to find the optimal value for your dataset.
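A patience-based stopping rule like the one described can be sketched as follows. The function, its `patience` and `min_delta` parameters, and the loss values are hypothetical illustrations; check which early-stopping options, if any, your training setup actually exposes.

```python
def early_stop_epoch(val_losses: list[float], patience: int = 10, min_delta: float = 0.0):
    """Return the epoch index at which training would stop, or None if it never stops.

    Training stops once `patience` consecutive epochs pass without the
    validation loss improving on the best value by more than `min_delta`.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return None


# Hypothetical validation losses that plateau after the fourth epoch (index 3).
losses = [0.90, 0.60, 0.40, 0.30] + [0.30] * 12
print(early_stop_epoch(losses, patience=10))  # 13
```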

Learning rate

Learning rate controls how much the model's parameters change with each training step. It's one of the most critical hyperparameters affecting training success.

How it works:

- High learning rate: Large parameter updates, faster initial learning, risk of instability

- Low learning rate: Small parameter updates, stable but slow learning, may get stuck

- Optimal learning rate: Balances speed and stability for efficient convergence
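A toy problem makes these three regimes visible. This sketch runs plain gradient descent on f(w) = w², whose gradient is 2w, so each step is w ← w - lr · 2w. It is a deliberate simplification: the adaptive optimizers covered later (Adam, AdamW) scale updates differently, so the specific thresholds here do not carry over to VLM training.

```python
def gradient_descent(lr: float, steps: int = 20, w0: float = 1.0) -> float:
    """Minimize f(w) = w**2 with plain gradient descent; return the final w."""
    w = w0
    for _ in range(steps):
        grad = 2 * w          # derivative of w**2
        w = w - lr * grad     # parameter update scaled by the learning rate
    return w


for lr in (0.001, 0.1, 1.1):
    print(f"lr={lr}: final w = {gradient_descent(lr):.4g}")
```

With lr = 0.001, w barely moves in 20 steps; lr = 0.1 approaches the minimum at 0; lr = 1.1 overshoots by a growing amount each step and diverges, the toy counterpart of the loss spikes and NaN values described in the tuning guidance.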

Typical range: 0.00001 (1e-5) to 0.0001 (1e-4)

Recommended starting values:

| Model size | LoRA Training | Full Finetuning (SFT) |
|---|---|---|
| Small (1-3B) | 0.0001 | 0.00005 |
| Medium (7-13B) | 0.0001 | 0.00003 |
| Large (20-34B) | 0.00005 | 0.00001 |

Learning rate significantly impacts training

Too high causes training instability and divergence. Too low results in slow training or getting stuck in poor solutions. Start with recommended values and adjust based on training behavior.

Tuning the learning rate:

Learning rate too high (signs and fixes)

Signs:

- Training loss increases or oscillates wildly

- Loss suddenly spikes to very large values

- Model predictions become nonsensical

- Training diverges or produces NaN values

Example:

```
Epoch 1: loss = 2.3
Epoch 2: loss = 1.8
Epoch 3: loss = 5.7  ← Spike indicates too high
Epoch 4: loss = NaN  ← Training diverged
```

Fix:

- Reduce learning rate by 2-5x (e.g., 0.0001 → 0.00002)

- Start a new training run with adjusted rate

- Consider increasing the effective batch size (for example, with gradient accumulation) for more stable gradients

Learning rate too low (signs and fixes)

Signs:

- Training loss decreases very slowly

- Progress stalls at high loss values

- Training takes excessively long

- Model underfits the data

Example:

```
Epoch 10: loss = 2.1
Epoch 20: loss = 2.08
Epoch 30: loss = 2.06  ← Very slow improvement
Epoch 40: loss = 2.04
```

Fix:

- Increase learning rate by 2-3x (e.g., 0.00001 → 0.00003)

- Start a new training run with adjusted rate

- Monitor closely to ensure stability

Learning rate just right (what to expect)

Good training behavior:

- Loss decreases steadily without large spikes

- Occasional small fluctuations are normal

- Converges within expected number of epochs

- Validation metrics improve consistently

Example:

```
Epoch 5:  loss = 2.1
Epoch 10: loss = 1.6
Epoch 15: loss = 1.3  ← Steady improvement
Epoch 20: loss = 1.1
Epoch 25: loss = 0.95
```

Characteristics:

- Smooth loss curve with minor noise

- Clear downward trend

- No sudden spikes or divergence

- Validation performance tracks training improvement

Learning rate schedules:

Advanced: Learning rate scheduling

Learning rate schedules automatically adjust the learning rate during training:

Common schedules:

- Constant (default):
  - Same rate throughout training
  - Simple and predictable
  - Good for most use cases
- Linear decay:
  - Gradually reduces rate over training
  - Helps fine-tune convergence at the end
  - Useful for long training runs
- Cosine annealing:
  - Reduces rate following a cosine curve
  - Smoother decay than linear
  - Popular for large model training

When to use schedules:

- Long training runs (100+ epochs)

- Fine-tuning pre-trained models

- Seeking optimal convergence

- Advanced optimization scenarios

Note: Most training workflows work well with constant learning rate. Schedules are an advanced optimization technique.
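For reference, the three schedules can be written as functions of the training step. These are common textbook forms (linear decay to zero and half-cosine annealing to zero, both without warmup); the exact formulas, floor values, and warmup used by your training setup may differ.

```python
import math


def constant_lr(step: int, total_steps: int, base_lr: float) -> float:
    """Same rate at every step (step and total_steps are unused)."""
    return base_lr


def linear_decay_lr(step: int, total_steps: int, base_lr: float) -> float:
    """Decrease linearly from base_lr at step 0 to 0 at total_steps."""
    return base_lr * (1 - step / total_steps)


def cosine_lr(step: int, total_steps: int, base_lr: float) -> float:
    """Follow half a cosine wave from base_lr down to 0."""
    return base_lr * 0.5 * (1 + math.cos(math.pi * step / total_steps))


total, base = 1000, 1e-4
for step in (0, 250, 500, 750, 1000):
    print(
        f"step {step:4d}: constant={constant_lr(step, total, base):.2e} "
        f"linear={linear_decay_lr(step, total, base):.2e} "
        f"cosine={cosine_lr(step, total, base):.2e}"
    )
```

Cosine annealing stays close to the base rate early on and flattens out near the end, which is what makes its decay smoother than the constant slope of linear decay.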

Batch size

The number of images processed simultaneously in each training step. Batch size affects training speed, memory usage, and model convergence.

How it works:

Batch size 2: Process 2 images → compute gradients → update model

Batch size 4: Process 4 images → compute gradients → update model

Batch size 8: Process 8 images → compute gradients → update model

Example:

- 100 training images, batch size 4

- Steps per epoch: 100 ÷ 4 = 25 steps

- 10 epochs = 250 total training steps

Typical range: 1-16 for VLM training

Recommended starting values:

| Model size | 16 GB GPU | 24 GB GPU | 40+ GB GPU |
|---|---|---|---|
| Small (1-3B) | 8 | 16 | 32 |
| Medium (7-13B) | 2-4 | 4-8 | 8-16 |
| Large (20-34B) | 1-2 | 2-4 | 4-8 |

Batch size tradeoffs

Larger batch sizes:

- Faster training (better GPU utilization)

- More stable gradients

- Higher memory usage

- May reduce model generalization

Smaller batch sizes:

- Lower memory requirements

- Better generalization (noisier gradient estimates can act as a regularizer)

- Slower training

- Less stable convergence

Choosing batch size:

Memory-constrained training

When GPU memory is limited:

Start with smallest batch size that trains successfully:

- Try batch size 4

- If out of memory, reduce to 2

- If still issues, try batch size 1

- Consider enabling quantization or using gradient accumulation

Memory optimization strategies:

- Enable quantization (NF4)

- Use gradient accumulation to simulate larger batches

- Choose smaller model size

- Use BFloat16 or Float16 precision instead of Float32

Balancing speed and quality

For optimal training:

Use the largest batch size that:

- Fits in GPU memory comfortably (~80% utilization)

- Maintains stable training (no memory errors)

- Provides reasonable training speed

Practical approach:

- Start with recommended value for your GPU

- Increase until you encounter memory issues

- Reduce by 25-50% for safety margin

- Monitor training stability

Example:

- GPU: 24GB VRAM

- Model: 13B parameters with LoRA

- Test batch sizes: 4 → 8 → 16

- Batch size 16 causes OOM errors

- Final choice: batch size 8 (safe maximum)

When batch size matters less

Batch size is less critical when:

- Using gradient accumulation (can simulate larger batches)

- Training small models with ample GPU memory

- Dataset is large (1000+ images)

Batch size is more critical when:

- GPU memory is constrained

- Training very large models

- Dataset is small (gradient noise matters more)

Gradient accumulation steps

The number of batches whose gradients are accumulated (each with its own forward and backward pass) before the model weights are updated. This technique simulates larger batch sizes without requiring additional GPU memory.

How it works:

Instead of updating the model after each batch, gradients are accumulated over multiple batches:

Batch size 4, accumulation steps 1 (default):

- Process 4 images → update model immediately

- Effective batch size: 4

Batch size 4, accumulation steps 4:

- Process 4 images → accumulate gradients

- Process 4 images → accumulate gradients

- Process 4 images → accumulate gradients

- Process 4 images → accumulate gradients → update model

- Effective batch size: 16 (4 × 4)

Effective batch size formula:

Effective batch size = Batch size × Gradient accumulation steps

Typical values: 1-8 (1 means no accumulation)

Why use gradient accumulation?

Gradient accumulation lets you train with larger effective batch sizes when GPU memory is limited. This improves training stability and gradient quality without requiring more memory.
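The equivalence can be checked numerically. This sketch uses a hypothetical one-parameter model y = w · x with mean squared error and compares one batch of 16 examples against 4 micro-batches of 4 with accumulated gradients. It assumes equal-sized micro-batches and divides each micro-batch gradient by the number of accumulation steps, which is how accumulation typically turns a sum of gradients into an average.

```python
import random


def mse_grad(w: float, xs: list[float], ys: list[float]) -> float:
    """Gradient of mean((w*x - y)**2) with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


random.seed(0)
xs = [random.uniform(-1, 1) for _ in range(16)]
ys = [3.0 * x + random.gauss(0, 0.1) for x in xs]  # hypothetical data, true slope 3
w = 0.5

# One batch of 16 examples.
full_batch_grad = mse_grad(w, xs, ys)

# Batch size 4 with 4 accumulation steps.
batch_size, accumulation_steps = 4, 4
accumulated = 0.0
for i in range(accumulation_steps):
    start = i * batch_size
    micro_grad = mse_grad(w, xs[start:start + batch_size], ys[start:start + batch_size])
    accumulated += micro_grad / accumulation_steps  # scale so the sum becomes an average

print(full_batch_grad, accumulated)
```

The two gradients agree up to floating-point rounding, so the single weight update that follows is the same as for a true batch of 16.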

When to use gradient accumulation:

Limited GPU memory

Problem: Want larger batch size but GPU memory is insufficient

Solution: Use gradient accumulation

Example:

- Target effective batch size: 16

- GPU can only handle batch size 4

- Set gradient accumulation steps: 4

- Effective batch size: 4 × 4 = 16 ✓

Tradeoff:

- Each weight update takes longer (4 sequential forward and backward passes run before every update)

- Same memory usage as batch size 4

- Training stability of batch size 16

Improving training stability

Higher effective batch sizes provide:

- More stable gradient estimates

- Smoother loss curves

- Better convergence for large models

- Reduced gradient noise

Recommended combinations:

| Scenario | Batch size | Accumulation steps | Effective batch |
|---|---|---|---|
| Memory constrained | 2 | 4 | 8 |
| Balanced | 4 | 2 | 8 |
| Memory available | 8 | 1 | 8 |

All achieve same effective batch size with different memory/speed tradeoffs.

When NOT to use accumulation

Avoid gradient accumulation when:

- GPU memory is sufficient for desired batch size

- Small datasets where gradient noise aids generalization

- Training is already slow and speed is critical

- Batch size 1-2 is sufficient for your model size

Why avoid unnecessary accumulation:

- Slower training (more forward passes per update)

- Adds complexity without benefit

- Default (accumulation steps = 1) works fine for most cases

Recommended values:

By GPU memory constraint

16GB GPU:

- Batch size 2, accumulation steps 4 (effective: 8)

- Batch size 1, accumulation steps 8 (effective: 8)

24GB GPU:

- Batch size 4, accumulation steps 2 (effective: 8)

- Batch size 2, accumulation steps 4 (effective: 8)

40GB+ GPU:

- Batch size 8, accumulation steps 1 (effective: 8)

- Usually no accumulation needed

Goal: Effective batch size of 8-16 for most VLM training

Optimizer

The optimization algorithm that adjusts the model's parameters to minimize the loss function. The optimizer determines how gradient information is used to update model weights.

Available optimizers:

AdamW

- Full name: Adam with Weight Decay

- How it works: Adaptive learning rates per parameter with decoupled weight regularization

- Best for: Most VLM training scenarios (recommended default)

- Advantages: Better convergence and generalization through decoupled weight decay

Adam

- How it works: Adaptive learning rates per parameter

- Difference from AdamW: Coupled weight decay (less effective regularization)

- Best for: Legacy compatibility, specific research requirements

Recommendation: AdamW

Use AdamW for VLM training as it provides better convergence and generalization than Adam. It's the standard optimizer for training transformer-based models.

Optimizer comparison:

| Optimizer | Convergence speed | Memory usage | Generalization | Use case |
|---|---|---|---|---|
| AdamW | Fast | Moderate | Excellent | Default (recommended) |
| Adam | Fast | Moderate | Good | Legacy/compatibility |

When to use each optimizer:

AdamW (recommended)

Use for:

- All standard VLM training (recommended for 95%+ of cases)

- Production models

- Fine-tuning transformer models

- When you want best practices defaults

Advantages:

- Decoupled weight decay improves regularization

- Adaptive learning rates per parameter

- Proven effective for large language and vision models

- Industry standard for transformer training

- Better generalization than Adam

Why it's better than Adam:

- Separates weight decay from gradient-based updates

- More effective regularization

- Better final model performance

- Widely adopted as best practice

Adam

Use for:

- Reproducing older experiments that used Adam

- Compatibility with existing configurations

- Specific research requirements

Note: AdamW is generally preferred over Adam. The main reason to use Adam is backward compatibility with older experiments or when reproducing specific published results that used Adam.

Difference from AdamW:

- Couples weight decay with gradient updates

- Slightly less effective regularization

- May lead to marginally lower performance
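The coupled-versus-decoupled distinction is easiest to see in a single update. This sketch implements the standard Adam and AdamW update rules for one scalar parameter, with bias-corrected moment estimates. In Adam, weight decay is added to the gradient and then divided by the adaptive denominator; in AdamW, it is applied to the weight directly. The hyperparameter values are illustrative, and the example isolates weight decay by using a parameter whose loss gradient is zero.

```python
import math


def adam_step(w, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999,
              eps=1e-8, weight_decay=0.0, decoupled=False):
    """One Adam (decoupled=False) or AdamW (decoupled=True) update for a scalar.

    Returns the new weight and the updated moment estimates (m, v).
    """
    if not decoupled:
        grad = grad + weight_decay * w            # Adam: L2 penalty folded into the gradient
    m = beta1 * m + (1 - beta1) * grad            # first moment (running mean of gradients)
    v = beta2 * v + (1 - beta2) * grad * grad     # second moment (running mean of squares)
    m_hat = m / (1 - beta1 ** t)                  # bias correction for early steps
    v_hat = v / (1 - beta2 ** t)
    update = m_hat / (math.sqrt(v_hat) + eps)     # adaptive, per-parameter step
    if decoupled:
        update += weight_decay * w                # AdamW: decay bypasses the adaptive scaling
    return w - lr * update, m, v


# Hypothetical parameter with no loss gradient, so only weight decay acts on it.
w_adam, _, _ = adam_step(1.0, 0.0, 0.0, 0.0, t=1, weight_decay=0.1)
w_adamw, _, _ = adam_step(1.0, 0.0, 0.0, 0.0, t=1, weight_decay=0.1, decoupled=True)
print(f"Adam:  {w_adam:.6f}")
print(f"AdamW: {w_adamw:.6f}")
```

With only the decay term acting, Adam's adaptive denominator rescales that term into a full learning-rate-sized step (w ≈ 0.999), while AdamW shrinks the weight by exactly lr × weight_decay × w (w = 0.9999).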

Evaluation

Evaluation settings control how the model generates outputs during inference and evaluation. These settings affect response quality, diversity, and computational cost.

Max new tokens

The maximum number of tokens the model can generate in a single response. This setting limits output length to control generation time and computational cost.

How it works:

Tokens are roughly words or subwords:

- "cat" = 1 token

- "running" might be 1-2 tokens

- "The quick brown fox" ≈ 5 tokens

Typical range: 128-1024 tokens

Recommended values:

| Task type | Max new tokens | Reasoning |
|---|---|---|
| Short answers (VQA) | 128-256 | Brief responses sufficient |
| Phrase grounding | 512 | JSON with multiple groundings |
| Detailed descriptions | 512-1024 | Comprehensive captions needed |

Tradeoff: Length vs. Speed

Higher values:

- Allow longer, more detailed responses

- Increase evaluation time per sample

- Higher computational cost

- Risk of repetitive or rambling outputs

Lower values:

- Force concise responses

- Faster evaluation

- Lower computational cost

- May truncate important information

Choosing max new tokens:

For phrase grounding tasks

Recommended: 512 tokens

Phrase grounding outputs include:

- Descriptive caption

- Multiple grounded phrases

- Bounding box coordinates

- JSON structure

Example output size:

```json
{
  "phrase_grounding": {
    "sentence": "A red car parked next to two people wearing blue shirts on the sidewalk near a green tree",
    "groundings": [
      {"phrase": "A red car", "grounding": [[120,340,580,670]]},
      {"phrase": "two people", "grounding": [[600,280,750,720],[780,290,920,710]]},
      {"phrase": "the sidewalk", "grounding": [[0,650,1024,900]]},
      ...
    ]
  }
}
```

Typical length: 200-400 tokens

Setting 512 provides comfortable margin without waste.

For visual question answering

Recommended: 128-256 tokens

VQA responses are typically:

- 1-3 sentences

- 20-80 tokens

- Focused and concise

Example responses:

- Short: "There are three dogs in the image" (~8 tokens)

- Medium: "The image shows three golden retrievers playing in a park on a sunny day" (~15 tokens)

- Long: "There are three dogs visible: two golden retrievers playing with a ball in the foreground and one black labrador resting under a tree in the background" (~30 tokens)

Setting 256 allows detailed answers without encouraging verbosity.

For custom tasks

Consider:

- Measure typical output length:
  - Generate sample outputs
  - Count tokens (roughly 1 token per word)
  - Use 1.5-2x the typical length as max
- Balance quality and cost:
  - Longer limits → more flexibility but slower
  - Shorter limits → faster but may truncate
  - Start conservative, increase if needed
- Monitor for truncation:
  - If outputs frequently hit the limit, increase
  - If outputs are always much shorter, decrease
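The measure-and-pad procedure can be sketched as follows. It approximates tokens with whitespace-separated words, following the rough rule above; real tokenizers usually produce somewhat more tokens than words, so counting with your model's own tokenizer is more reliable. The function names and the choice of the median as the "typical" length are assumptions made for illustration.

```python
import math
import statistics


def approx_tokens(text: str) -> int:
    """Rough token estimate: one token per whitespace-separated word."""
    return len(text.split())


def suggest_max_new_tokens(sample_outputs: list[str], multiplier: float = 2.0) -> int:
    """Suggest a limit of `multiplier` times the median sample length."""
    typical = statistics.median(approx_tokens(s) for s in sample_outputs)
    return math.ceil(typical * multiplier)


def truncation_rate(output_token_counts: list[int], max_new_tokens: int) -> float:
    """Fraction of generations that reached the limit (and were likely cut off)."""
    hits = sum(1 for n in output_token_counts if n >= max_new_tokens)
    return hits / len(output_token_counts)


samples = [
    "There are three dogs in the image",
    "The image shows three golden retrievers playing in a park on a sunny day",
    "There are three dogs visible: two golden retrievers playing with a ball "
    "in the foreground and one black labrador resting under a tree in the background",
]
print(suggest_max_new_tokens(samples))  # 28 with the word-count approximation
```

If `truncation_rate` on real generations is noticeably above zero, raise the limit; if outputs consistently stop far below it, lower it.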

Top K results

Limits token sampling to the K most probable next tokens. This setting controls output diversity by restricting the model's choices to the most likely options.

How it works:

At each generation step:

- Model computes probability for all possible next tokens

- Top K keeps only the K most probable tokens

- Model samples from these K tokens
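These steps can be sketched over a small dictionary of next-token probabilities. The tokens and probabilities are hypothetical, and real implementations operate on logits over the full vocabulary, but the filtering logic is the same: keep the K most probable tokens, renormalize their probabilities, and sample.

```python
import random


def top_k_filter(probs: dict[str, float], k: int) -> dict[str, float]:
    """Keep the k most probable tokens and renormalize their probabilities to sum to 1."""
    kept = sorted(probs.items(), key=lambda item: item[1], reverse=True)[:k]
    total = sum(p for _, p in kept)
    return {token: p / total for token, p in kept}


def sample(probs: dict[str, float], rng: random.Random) -> str:
    """Draw one token according to the (already filtered) probabilities."""
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


next_token_probs = {"car": 0.40, "truck": 0.30, "van": 0.15, "bus": 0.10, "bike": 0.05}
filtered = top_k_filter(next_token_probs, k=2)
print(filtered)                            # only "car" and "truck" remain
print(sample(filtered, random.Random(0)))  # either "car" or "truck"
```

With k = 1 the filter keeps only the single most probable token, so sampling becomes fully deterministic.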

Example:

Top K = 50:

- All tokens ranked by probability

- Keep top 50 most probable

- Sample next token from these 50

- Ignore all other possibilities

Top K = 5:

- Keep only top 5 most probable

- Very focused, near-deterministic output (only K = 1, which always picks the most probable token, is fully deterministic)

- Less diversity

Typical range: 1-100

Recommended starting value: 50

How Top K affects outputs

Lower values (10-30):

- More focused and deterministic

- Less diversity and creativity

- More consistent outputs

- Risk of repetition

Higher values (50-100):

- More diversity and variation

- Less predictable

- Broader vocabulary usage

- May reduce coherence if too high

Choosing Top K:

Deterministic tasks (object detection, VQA)

Recommended: 50

For tasks requiring factual accuracy:

- Object detection

- Bounding box generation

- Answering specific questions

- Structured output generation

Why 50 works well:

- Enough diversity to avoid repetition

- Focused enough for accurate outputs

- Balances creativity and precision

Example: "How many cars are in the image?"

- Lower K: "There are 3 cars" (consistent)

- Higher K: Various phrasings but same count

Creative tasks (descriptions, captions)

Recommended: 70-100

For tasks benefiting from diversity:

- Detailed scene descriptions

- Creative captions

- Varied phrasing

Why higher K helps:

- More vocabulary variety

- Diverse expression styles

- Less repetitive phrasing

Example: "Describe this scene"

- Lower K: More standardized descriptions

- Higher K: More varied and creative language

Interaction with Top P

Top K and Top P work together:

Both limit the sampling pool:

- Top K: "Keep top 50 tokens"

- Top P: "Keep tokens until cumulative probability reaches 0.95"

In practice:

- Set Top K as hard upper limit

- Top P provides dynamic threshold

- Both constrain sampling for quality

Recommended combination:

- Top K: 50

- Top P: 0.95

- Works well for most scenarios

Top P

Nucleus sampling threshold: the model samples from the smallest set of most probable tokens whose cumulative probability reaches P. Because the size of that set depends on how confident the model is, this approach limits token choices dynamically.

How it works:

Instead of fixed K tokens, select tokens until cumulative probability reaches threshold:

Top P = 0.9:

- Sort all tokens by probability

- Add tokens until cumulative probability ≥ 0.9

- Sample from this dynamic set

Example:

Token A: 40% probability

Token B: 30% probability

Token C: 15% probability

Token D: 10% probability

Others: 5% probability

With Top P = 0.9:

- Keep A (40% cumulative)

- Keep B (70% cumulative)

- Keep C (85% cumulative)

- Keep D (95% cumulative ≥ 90% ✓)

- Result: Sample from {A, B, C, D}

Typical range: 0.0-1.0 (commonly 0.90-0.95)

Recommended starting value: 0.95
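Nucleus filtering can be sketched over the probabilities from the example above, treating the 5% "others" bucket as a single token for simplicity. Tokens are added in descending order of probability until the running total reaches P; only those tokens remain eligible, and their probabilities are renormalized before sampling. The function name is hypothetical.

```python
def top_p_filter(probs: dict[str, float], p: float) -> dict[str, float]:
    """Keep the smallest set of most probable tokens whose cumulative probability >= p."""
    kept = {}
    cumulative = 0.0
    for token, prob in sorted(probs.items(), key=lambda item: item[1], reverse=True):
        kept[token] = prob
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(kept.values())
    return {token: prob / total for token, prob in kept.items()}


probs = {"A": 0.40, "B": 0.30, "C": 0.15, "D": 0.10, "others": 0.05}
print(sorted(top_p_filter(probs, 0.9)))  # ['A', 'B', 'C', 'D']
print(sorted(top_p_filter(probs, 0.5)))  # ['A', 'B']
```

Lowering P shrinks the candidate set, which is why low Top P values produce more focused, repetitive outputs.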

Top P vs Top K

Top K: Fixed number of tokens (e.g., always 50)

Top P: Variable number based on confidence distribution

Top P adapts:

- When model is confident: few tokens needed to reach P

- When model is uncertain: more tokens needed to reach P

Understanding Top P values:

High Top P (0.95-1.0)

More diverse outputs:

- Includes less probable tokens

- Greater output variety

- More creative and unpredictable

- Risk of lower coherence

Use when:

- Creative description tasks

- Varied phrasing desired

- Output diversity important

- Repetition is a problem

Example: Top P = 0.95

- Allows more vocabulary choices

- Includes less common but valid alternatives

- Increases expression variety

Medium Top P (0.85-0.94)

Balanced outputs (recommended for most tasks):

- Moderate diversity

- Maintains coherence

- Focused but not repetitive

- Good default choice

Use when:

- Standard VLM tasks

- Balance needed between quality and diversity

- First-time configuration

- Phrase grounding and VQA

Example: Top P = 0.90

- Focuses on high-probability tokens

- Allows some variation

- Prevents most low-quality choices

Low Top P (0.7-0.84)

Focused outputs:

- Very deterministic

- Minimal diversity

- Highly consistent

- Risk of repetition

Use when:

- Maximum consistency required

- Factual accuracy critical

- Template-like outputs desired

- Debugging generation issues

Example: Top P = 0.80

- Very focused token selection

- Predictable outputs

- May become repetitive

Recommended combinations:

Standard phrase grounding

Configuration:

- Top K: 50

- Top P: 0.95

- Temperature: 1.0

Why this works:

- Allows diverse phrasing for captions

- Maintains focus for accurate groundings

- Balances creativity and precision

Factual VQA

Configuration:

- Top K: 50

- Top P: 0.90

- Temperature: 0.7

Why this works:

- Focuses on most probable (accurate) answers

- Reduces creative but potentially incorrect responses

- Maintains consistency across similar questions

Creative descriptions

Configuration:

- Top K: 70

- Top P: 0.95

- Temperature: 1.2

Why this works:

- High diversity in vocabulary

- Varied expression styles

- Creative but coherent outputs
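If you reproduce these combinations outside the platform, one option is to store them as presets. The sketch below assumes Hugging Face transformers-style generate() keyword names (do_sample, top_k, top_p, temperature, max_new_tokens). The preset names and the generation_kwargs helper are hypothetical, and the platform's own setting names may differ.

```python
# Hypothetical preset table; key names follow Hugging Face transformers'
# generate() keyword arguments, not the platform's own setting names.
SAMPLING_PRESETS = {
    "phrase_grounding": {"top_k": 50, "top_p": 0.95, "temperature": 1.0},
    "factual_vqa": {"top_k": 50, "top_p": 0.90, "temperature": 0.7},
    "creative_description": {"top_k": 70, "top_p": 0.95, "temperature": 1.2},
}


def generation_kwargs(task: str, max_new_tokens: int = 512) -> dict:
    """Build keyword arguments for a sampling-based generate() call."""
    preset = SAMPLING_PRESETS[task]
    # do_sample=True is needed; with greedy decoding, top_k/top_p/temperature go unused.
    return {"do_sample": True, "max_new_tokens": max_new_tokens, **preset}


print(generation_kwargs("factual_vqa"))
```

With a transformers model, the result would be passed as model.generate(**inputs, **generation_kwargs("factual_vqa")). That call is shown only as an illustration.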

Sampling temperature

Controls randomness in token selection during generation. Temperature shapes the probability distribution over possible next tokens, directly affecting output diversity and creativity.

How it works:

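Temperature divides the model's logits (raw token scores) before the softmax. This is equivalent to raising each probability to the power 1/T and renormalizing: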

Low temperature (0.3):

- Sharpens distribution → more deterministic

- High-probability tokens become even more likely

- Low-probability tokens become even less likely

High temperature (1.5):

- Flattens distribution → more random

- Probabilities become more uniform

- Low-probability tokens get more chances

Example:

Original probabilities:

Token A: 40%

Token B: 30%

Token C: 20%

Token D: 10%

Temperature = 0.5 (low):

Token A: ~53% ← More focused

Token B: 30%

Token C: ~13%

Token D: ~3%

Temperature = 1.5 (high):

Token A: ~35% ← More uniform

Token B: ~29%

Token C: ~22%

Token D: ~14%

Typical range: 0.1-2.0 (commonly 0.7-1.3)

Recommended starting value: 1.0 (neutral)
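The example can be checked with standard temperature scaling. This treats log-probabilities as logits, divides them by T, and applies a softmax, which is the same as raising each probability to the power 1/T and renormalizing. The following is a minimal sketch assuming that standard formulation. apply_temperature is a hypothetical helper name.

```python
import math


def apply_temperature(probs: list[float], temperature: float) -> list[float]:
    """Rescale a distribution as softmax(log(p) / T), i.e. p ** (1 / T) renormalized."""
    logits = [math.log(p) for p in probs]  # treat log-probabilities as logits
    scaled = [logit / temperature for logit in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


original = [0.40, 0.30, 0.20, 0.10]  # tokens A, B, C, D
for t in (0.5, 1.0, 1.5):
    print(t, [round(100 * p, 1) for p in apply_temperature(original, t)])
```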

Temperature intuition

Think of temperature like confidence vs. exploration:

Low temperature (0.3-0.7): "Play it safe, use most likely words"

Medium temperature (0.8-1.2): "Balance safety with some variation"

High temperature (1.3-2.0): "Be creative, try different approaches"

Choosing temperature:

Low temperature (0.3-0.7)

Focused, deterministic outputs:

Use for:

- Factual question answering

- Structured output generation

- Consistency-critical tasks

- Bounding box generation

Characteristics:

- Very predictable outputs

- High consistency

- Low diversity

- Risk of repetition with very low values

Example use case: Counting objects in images:

- Temperature 0.5

- Consistent "There are X objects" format

- Minimal phrasing variation

- Focus on accuracy

Recommended values:

- VQA (factual): 0.7

- Object counting: 0.5

- Structured outputs: 0.6

Medium temperature (0.8-1.2)

Balanced outputs (recommended for most tasks):

Use for:

- Standard VLM tasks

- Phrase grounding

- General image understanding

- Most production scenarios

Characteristics:

- Good balance of quality and diversity

- Natural-sounding outputs

- Appropriate variation

- Reliable performance

Example use case: Phrase grounding captions:

- Temperature 1.0

- Varied but accurate descriptions

- Natural language flow

- Consistent quality

Recommended values:

- Phrase grounding: 1.0

- VQA (descriptive): 1.0

- General tasks: 0.9-1.1

High temperature (1.3-2.0)

Creative, diverse outputs:

Use for:

- Creative image descriptions

- Multiple phrasing alternatives

- Exploring diverse generations

- Reducing repetition

Characteristics:

- High output diversity

- Creative language use

- Less predictable

- Risk of incoherence if too high

Example use case: Creative scene descriptions:

- Temperature 1.5

- Varied vocabulary and phrasing

- Multiple perspectives

- Interesting but potentially less consistent

Recommended values:

- Creative descriptions: 1.3-1.5

- Diverse generations: 1.4

- Maximum diversity: 1.6-1.8

Caution: Values above 1.5 may reduce quality

Temperature interactions:

Combining with Top K and Top P

Temperature works with Top K and Top P:

Processing order:

- Temperature: Reshapes probability distribution

- Top K: Limits to K most probable tokens

- Top P: Limits to cumulative probability threshold

- Sample: Choose next token from remaining options
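The processing order can be sketched end to end as follows. This is an illustrative toy over a four-token vocabulary, assuming logits are available as a dict. It renormalizes after Top K. Real implementations may differ in details such as tie handling or a minimum number of tokens kept.

```python
import math
import random


def sample_next_token(logits: dict[str, float], temperature: float, top_k: int,
                      top_p: float, rng: random.Random) -> str:
    """Temperature -> Top K -> Top P -> sample, over a toy vocabulary."""
    # 1. Temperature: divide the logits, then softmax into probabilities.
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())
    exps = {tok: math.exp(value - peak) for tok, value in scaled.items()}
    total = sum(exps.values())
    probs = {tok: e / total for tok, e in exps.items()}

    # 2. Top K: keep the k most probable tokens and renormalize over them.
    top = sorted(probs, key=probs.get, reverse=True)[:top_k]
    mass = sum(probs[tok] for tok in top)
    probs = {tok: probs[tok] / mass for tok in top}

    # 3. Top P: keep the smallest prefix whose cumulative probability reaches top_p.
    nucleus, cumulative = [], 0.0
    for tok in top:
        nucleus.append(tok)
        cumulative += probs[tok]
        if cumulative >= top_p:
            break

    # 4. Sample: random.choices normalizes the weights of the surviving tokens.
    return rng.choices(nucleus, weights=[probs[tok] for tok in nucleus], k=1)[0]


rng = random.Random(42)  # seeded so the run is reproducible
toy_logits = {"car": 2.0, "vehicle": 1.0, "truck": 0.5, "bus": -1.0}
print([sample_next_token(toy_logits, 0.7, 50, 0.90, rng) for _ in range(5)])
```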

Recommended combinations:

For factual tasks:

Temperature: 0.7 (focused)

Top K: 50 (moderate limit)

Top P: 0.90 (focused sampling)

→ Focused, consistent outputs

For balanced tasks:

Temperature: 1.0 (neutral)

Top K: 50 (moderate limit)

Top P: 0.95 (balanced sampling)

→ Natural, reliable outputs

For creative tasks:

Temperature: 1.3 (creative)

Top K: 70 (wider limit)

Top P: 0.95 (diverse sampling)

→ Varied, interesting outputs

Sampling repetition penalty

Reduces the likelihood of repeating tokens that have already been generated. This penalty helps create more diverse and natural-sounding outputs by discouraging repetitive patterns.

How it works:

After generating each token:

- Track which tokens have been used

- Reduce probability of already-used tokens

- Higher penalty = stronger discouragement

Typical range: 1.0-2.0

- 1.0: No penalty (default behavior)

- 1.05: Gentle penalty

- 1.1: Moderate penalty (recommended when repetition is noticeable)

- 1.3: Strong penalty

- 1.5+: Very strong penalty (may hurt coherence)

Recommended starting value: 1.05

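How repetition penalty works

Simplified example with penalty 1.2:

If "car" was already generated:

- Original probability: 10%

- After penalty: 10% ÷ 1.2 ≈ 8.3%

If the penalty compounded with each reuse, the probability would keep falling:

- Second use: 8.3% ÷ 1.2 ≈ 6.9%

- Third use: 6.9% ÷ 1.2 ≈ 5.8%

These percentages are approximate. In practice, the penalty is applied to the token's logit (raw score), and the distribution is then renormalized. Whether it compounds depends on the implementation. The widely used CTRL-style penalty (for example, repetition_penalty in Hugging Face transformers) is applied once to each distinct token already in the sequence, however many times it appeared.

Either way, the model shifts toward alternative words.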
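Here is a minimal sketch of the CTRL-style formulation, which acts on logits. Positive logits are divided by the penalty and negative logits are multiplied by it, once per distinct token already generated. Whether the platform uses this exact formulation is an assumption, and the vocabulary and logits are made up.

```python
import math


def apply_repetition_penalty(logits: dict[str, float], generated: list[str],
                             penalty: float) -> dict[str, float]:
    """CTRL-style penalty: applied once to every distinct token already generated."""
    penalized = dict(logits)
    for token in set(generated):  # set(): repeated occurrences do not compound
        if token in penalized:
            score = penalized[token]
            # Dividing a positive logit or multiplying a negative one both lower it.
            penalized[token] = score / penalty if score > 0 else score * penalty
    return penalized


def softmax(logits: dict[str, float]) -> dict[str, float]:
    """Convert logits to probabilities (max-subtracted for numerical stability)."""
    peak = max(logits.values())
    exps = {tok: math.exp(v - peak) for tok, v in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


logits = {"car": 2.0, "vehicle": 1.5, "red": 1.0, "parked": 0.5}
before = softmax(logits)
after = softmax(apply_repetition_penalty(logits, ["the", "car", "car"], 1.2))
print(f"P(car): {before['car']:.3f} -> {after['car']:.3f}")
```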

Choosing repetition penalty:

Low penalty (1.0-1.1)

Minimal repetition discouragement:

Use for:

- Natural language where repetition is acceptable

- Technical descriptions requiring specific terminology

- Structured outputs with repeated elements

- Default starting point

Value 1.0 (no penalty):

- Natural repetition patterns

- May repeat common words naturally

- Good for most standard tasks

Value 1.05 (gentle penalty):

- Slight preference for variety

- Maintains natural language flow

- Recommended default for most VLM tasks

Example: Phrase grounding captions naturally repeat:

- "The red car next to a blue car" (car repeated appropriately)

- Penalty 1.05 allows this natural repetition

Moderate penalty (1.1-1.3)

Balanced repetition control (recommended when repetition is noticeable):

Use for:

- Long-form descriptions

- Reducing noticeable repetition

- Creative text generation

- When repetition becomes problematic

Value 1.1:

- Good balance for most use cases

- Reduces obvious repetition

- Maintains coherence

Value 1.2:

- Stronger variety encouragement

- For longer outputs

- When 1.1 shows too much repetition

Example: Without penalty: "The image shows a person wearing a hat. The person is standing next to another person. Each person has a bag."

With penalty 1.2: "The image shows a person wearing a hat, standing next to someone else. Both individuals carry bags." → More varied vocabulary

High penalty (1.3+)

Strong repetition avoidance:

Use for:

- Creative writing scenarios

- Extreme repetition problems

- Experimental settings

Caution:

- May force unnatural word choices

- Can reduce coherence

- Might avoid necessary repetitions

- Use only when repetition is severe

Value 1.5:

- Very strong penalty

- Significantly alters word choice

- Risk of awkward phrasing

Example: With very high penalty: "The car is red" → hard to repeat "car" → forced to use "vehicle", "automobile", "auto" even when "car" is most natural

Generally not recommended unless repetition is extreme.

Common scenarios:

Phrase grounding outputs

Recommended: 1.05 (gentle)

Phrase grounding naturally involves:

- Repeating object names in groundings

- Similar phrasing across detections

- Structured JSON format

Why gentle penalty:

- Allow natural terminology repetition

- Maintain accurate object references

- Preserve structured output format

Example:

{
  "groundings": [
    {"phrase": "a red car", ...},
    {"phrase": "a blue car", ...},
    {"phrase": "the parked cars", ...}
  ]
}

→ "car" repeated appropriately

Visual question answering

Recommended: 1.05-1.1

VQA responses are typically:

- Short (1-3 sentences)

- Focused answers

- Limited repetition risk

Why light penalty:

- Short outputs have less repetition

- Focus on accuracy over variety

- Natural answer patterns acceptable

Example question: "What color are the cars?"

Answer with 1.05: "There are two cars: a red car and a blue car." → Natural repetition of "car" acceptable

Long descriptions

Recommended: 1.1-1.2

Longer outputs risk more repetition:

- Multiple sentences

- Describing many objects

- Detailed scene understanding

Why moderate penalty:

- Encourages vocabulary variety

- Maintains natural flow

- Prevents monotonous phrasing

Example without penalty: "The scene shows a person in a red shirt. Next to the person is another person in a blue shirt. Behind these two people is a third person."

Example with penalty 1.15: "The scene shows a person in a red shirt, beside someone wearing blue. A third individual stands behind them." → More varied phrasing

Best practices

Start with recommended defaults

Recommended starting configuration:

Model Options:

- Architecture size: Medium (7-13B), or Small (1-3B) for testing

- Training mode: LoRA Training

- Quantization: NF4

- Precision type: BFloat16

Hyperparameters:

- Epochs: 100 (adjust based on dataset size)

- Learning rate: 0.0001 (for LoRA Training)

- Batch size: 4 (adjust for your GPU)

- Gradient accumulation: 1

- Optimizer: AdamW

Evaluation:

- Max new tokens: 512

- Top K: 50

- Top P: 0.95

- Temperature: 1.0

- Repetition penalty: 1.05

Why these defaults:

- Balanced across speed, quality, and resource usage

- Work well for most VLM tasks

- Safe starting point for experimentation

- Proven effective in production
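For reference, the defaults above can be captured in a single record so that runs are easy to compare. The field names and the WorkflowDefaults class are hypothetical illustrations, not the platform's or the Vi SDK's schema.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class WorkflowDefaults:
    """Hypothetical record of the recommended starting configuration.

    Field names are illustrative; they are not the platform's or Vi SDK's schema.
    """
    # Model options
    architecture_size: str = "medium"  # 7-13B; use "small" (1-3B) for quick tests
    training_mode: str = "lora"
    quantization: str = "nf4"
    precision: str = "bfloat16"
    # Hyperparameters
    epochs: int = 100
    learning_rate: float = 1e-4
    batch_size: int = 4
    gradient_accumulation: int = 1
    optimizer: str = "adamw"
    # Evaluation
    max_new_tokens: int = 512
    top_k: int = 50
    top_p: float = 0.95
    temperature: float = 1.0
    repetition_penalty: float = 1.05

    @property
    def effective_batch_size(self) -> int:
        """Images contributing to each optimizer update."""
        return self.batch_size * self.gradient_accumulation


defaults = WorkflowDefaults()
print(asdict(defaults))
```

Freezing the dataclass keeps the baseline from being mutated accidentally. Variations are created as new objects instead.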

Iterate based on results

Systematic tuning approach:

- Start with defaults (above configuration)

- Train initial model and evaluate performance

- Identify issues from training behavior:

  - Slow convergence → adjust learning rate

  - Memory errors → reduce batch size or enable quantization

  - Poor quality → try a larger model

  - Overfitting → reduce epochs or add regularization

- Change one thing at a time for clear cause-effect

- Compare results across runs using evaluation metrics

- Refine iteratively until satisfactory performance

Example iteration:

Run 1 (defaults):

- Result: Good quality but slow convergence

Run 2 (adjust learning rate):

- Learning rate: 0.0001 → 0.0002

- Result: Faster convergence, similar quality

Run 3 (adjust batch size):

- Batch size: 4 → 8

- Result: Stable training, faster per-epoch time

Run 4 (final tuning):

- Epochs: 100 → 75 (converged earlier)

- Result: Optimal configuration found
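The iteration above follows a simple greedy loop. Change one setting per run, and keep the change only if the evaluation metric improves. In this minimal sketch, evaluate stands in for training a run and returning a validation score (higher is better). Both evaluate and toy_evaluate are hypothetical, and toy_evaluate's numbers are invented purely to exercise the loop.

```python
from typing import Callable


def tune_one_at_a_time(baseline: dict, changes: list[tuple[str, object]],
                       evaluate: Callable[[dict], float]) -> tuple[dict, float]:
    """Greedy tuning: each run changes one setting; keep it only if the score improves.

    `evaluate` stands in for "train a run and return a validation metric where
    higher is better". It is a hypothetical placeholder, not a platform API.
    """
    best_config, best_score = dict(baseline), evaluate(baseline)
    for setting, value in changes:
        candidate = {**best_config, setting: value}  # differs in exactly one setting
        score = evaluate(candidate)
        if score > best_score:  # keep changes that improve results
            best_config, best_score = candidate, score
    return best_config, best_score


def toy_evaluate(config: dict) -> float:
    """Made-up scoring function used only to exercise the loop."""
    score = 0.80
    score += 0.05 if config["learning_rate"] == 2e-4 else 0.0
    score += 0.02 if config["batch_size"] == 8 else 0.0
    score -= 0.0001 * abs(config["epochs"] - 75)
    return score


baseline = {"learning_rate": 1e-4, "batch_size": 4, "epochs": 100}
changes = [("learning_rate", 2e-4), ("batch_size", 8), ("epochs", 75), ("learning_rate", 5e-4)]
best, score = tune_one_at_a_time(baseline, changes, toy_evaluate)
print(best, round(score, 4))
```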

Match settings to your resources

For limited GPU memory (8-16GB):

Architecture: Small (1-3B)

Training mode: LoRA Training

Quantization: NF4

Precision: BFloat16

Batch size: 1-2

Gradient accumulation: 4-8

For standard GPU (24-32GB):

Architecture: Medium (7-13B)

Training mode: LoRA Training

Quantization: NF4

Precision: BFloat16

Batch size: 4-8

Gradient accumulation: 1-2

For high-end GPU (40-80GB):

Architecture: Medium to Large

Training mode: LoRA Training or Full Finetuning (SFT)

Quantization: NF4 or FP4

Precision: BFloat16

Batch size: 8-16

Gradient accumulation: 1

Monitor training behavior

Watch for these patterns:

Loss curves:

- Smooth decrease: Good configuration ✓

- Erratic spikes: Learning rate too high

- Plateau early: Learning rate too low or model capacity insufficient

- Overfitting: Training loss decreases but validation increases

Memory usage:

- Steady 70-80% GPU memory usage: Optimal ✓

- Frequent OOM (out-of-memory) errors: Reduce batch size or enable quantization

- Low memory usage (<50%): Can increase batch size

Training speed:

- Consistent step times: Good ✓

- Increasing step times: Memory/swap issues

- Very slow: Consider larger batch size or better GPU
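Two of these patterns can be checked automatically from logged losses. The sketch below is illustrative only. The diagnose function, the spike ratio of 1.5, and the three-point window are assumptions, not platform features or defaults.

```python
def diagnose(train_loss: list[float], val_loss: list[float],
             spike_ratio: float = 1.5, window: int = 3) -> list[str]:
    """Flag two loss-curve patterns with simple, illustrative heuristics.

    Assumes both lists hold one value per evaluation point (e.g. per epoch).
    The thresholds are arbitrary choices, not platform defaults.
    """
    issues = []
    # Erratic spikes: a step whose loss jumps well above the previous step.
    if any(cur > prev * spike_ratio for prev, cur in zip(train_loss, train_loss[1:])):
        issues.append("spikes: learning rate may be too high")
    # Overfitting: over the last `window` steps, training loss falls while validation rises.
    if len(train_loss) > window and len(val_loss) > window:
        recent_train = train_loss[-(window + 1):]
        recent_val = val_loss[-(window + 1):]
        train_falling = all(b < a for a, b in zip(recent_train, recent_train[1:]))
        val_rising = all(b > a for a, b in zip(recent_val, recent_val[1:]))
        if train_falling and val_rising:
            issues.append("overfitting: reduce epochs or add regularization")
    return issues


print(diagnose([1.0, 0.8, 0.6, 0.4], [0.9, 1.0, 1.1, 1.2]))
```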

Document your configurations

Track successful configurations:

Keep records of:

- Which settings worked for which tasks

- Resource requirements (GPU memory, training time)

- Performance metrics achieved

- Issues encountered and solutions

Naming convention for workflows:

[Task]-[Model Size]-[Key Settings]-v[Number]

Examples:

- "DefectDetection-7B-LoRA-NF4-v1"

- "ProductRecog-13B-LoRA-FastConv-v2"

- "QualityControl-3B-FullFT-HighAcc-v1"

Benefits:

- Quickly identify successful configurations

- Share settings with team members

- Reproduce results reliably

- Track iteration progress
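A small helper keeps names consistent with the convention. This is a hypothetical sketch. The regular expression assumes task names contain only letters and model sizes look like 7B or 13B.

```python
import re

# Hypothetical pattern for [Task]-[Model Size]-[Key Settings]-v[Number].
NAME_PATTERN = re.compile(
    r"^(?P<task>[A-Za-z]+)-(?P<size>\d+B)-(?P<settings>.+)-v(?P<version>\d+)$"
)


def workflow_name(task: str, model_size: str, key_settings: list[str], version: int) -> str:
    """Build a name following [Task]-[Model Size]-[Key Settings]-v[Number]."""
    return f"{task}-{model_size}-{'-'.join(key_settings)}-v{version}"


def parse_workflow_name(name: str) -> dict:
    """Split a conforming name back into its parts; raise if it does not conform."""
    match = NAME_PATTERN.match(name)
    if match is None:
        raise ValueError(f"not in [Task]-[Model Size]-[Key Settings]-v[Number] form: {name}")
    parts = match.groupdict()
    return {**parts, "settings": parts["settings"].split("-"), "version": int(parts["version"])}


print(workflow_name("DefectDetection", "7B", ["LoRA", "NF4"], 1))
print(parse_workflow_name("ProductRecog-13B-LoRA-FastConv-v2"))
```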

Common questions

Which settings have the biggest impact on training quality?

Most critical settings for quality:

1. Model architecture size

- Larger models generally achieve higher quality

- Most important setting for model capacity

2. Learning rate

- A correctly set learning rate ensures convergence

- Too high or too low severely impacts results

3. Epochs

- Sufficient epochs are required to converge

- More isn't always better (overfitting risk)

Not a setting, but often the largest factor: dataset quality and size

- High-quality annotations are critical

- More data generally helps

Less critical for quality (but affect speed/resources):

- Training mode (LoRA Training vs Full Finetuning): Similar quality for most tasks

- Quantization: Usually minimal quality impact

- Batch size: Affects stability more than final quality

- Evaluation settings: Only affect inference, not training

My training is too slow. What should I change?

Speed optimization strategies:

1. Reduce model size:

- Large (20-34B) → Medium (7-13B): 2-3x faster

- Medium (7-13B) → Small (1-3B): 2-4x faster

2. Enable optimizations:

- Use LoRA Training instead of Full Finetuning (SFT): 2-3x faster

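- Use NF4 quantization: mainly saves memory; per-step speed is often similar or slightly slower, but the freed memory can allow a larger batch size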

- Use BFloat16 precision: 2x faster vs Float32

3. Increase batch size:

- Batch 2 → Batch 4: ~1.5x faster

- Batch 4 → Batch 8: ~1.3x faster

- Limited by GPU memory

4. Reduce epochs:

- Monitor for early convergence

- Stop when validation metrics plateau

- May be training longer than needed

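5. Replace gradient accumulation with a larger batch size:

- Only if memory allows

- For the same effective batch size, fewer, larger batches use the GPU more efficiently than many small accumulated ones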

Typical speedup example:

- Before: Large model, Full Finetuning (SFT), batch 2 → 10 hours

- After: Medium model, LoRA Training, NF4, batch 4 → 2-3 hours

- ~3-4x total speedup; the quality impact is often small, but verify it on your evaluation set, since a smaller model can lower quality

How do I know if my settings are good?

Indicators of good configuration:

Training behavior:

- Loss decreases smoothly

- No frequent spikes or NaN values

- Reasonable training speed

- GPU utilization 70-90%

- No out-of-memory errors

Results quality:

- Validation metrics improve over training

- Test set performance meets requirements

- Generated outputs are coherent and accurate

- Model generalizes to new examples

Resource usage:

- Training completes in acceptable time

- GPU memory usage stable

- Costs within budget

Comparison approach:

- Train with default settings (baseline)

- Evaluate quality metrics

- Adjust one setting at a time

- Compare metrics to baseline

- Keep changes that improve results

Should I use the same settings for all my projects?

Start similar, then customize:

Reusable baseline:

- Core settings (LoRA Training, NF4, AdamW) work across projects

- Training approach generalizes well

- Resource optimizations apply universally

Project-specific tuning:

- Epochs: Depends on dataset size

- Learning rate: May need adjustment per task

- Batch size: Constrained by your GPU

- Model size: Depends on task complexity

Best practice:

- Create a "baseline" workflow with proven settings

- Clone it for new projects

- Adjust task-specific parameters:

  - System prompt

  - Dataset

  - Epochs based on data size

- Fine-tune if baseline doesn't perform well

Example:

Baseline workflow:

- 7B model, LoRA Training, NF4, BFloat16

- Batch 4, AdamW, LR 0.0001

- Works for most detection tasks

Project A (100 images):

- Clone baseline

- Adjust: Epochs 150 (small dataset)

Project B (1000 images):

- Clone baseline

- Adjust: Epochs 75 (large dataset)

- Adjust: Batch 8 (more memory available)
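Cloning a baseline and overriding only task-specific fields can be sketched with Python dataclasses. Workflow and overrides are hypothetical names, not platform or Vi SDK types. The baseline epochs value of 100 is taken from the recommended defaults, since the baseline above doesn't state one.

```python
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class Workflow:
    """Hypothetical, trimmed-down workflow record; not a Vi SDK or platform type."""
    name: str
    model_size: str = "7B"
    training_mode: str = "lora"
    quantization: str = "nf4"
    precision: str = "bfloat16"
    batch_size: int = 4
    optimizer: str = "adamw"
    learning_rate: float = 1e-4
    epochs: int = 100  # assumed default, taken from the recommended starting configuration


def overrides(base: Workflow, variant: Workflow) -> dict:
    """List only the settings a cloned workflow changed relative to its baseline."""
    base_fields = asdict(base)
    return {key: value for key, value in asdict(variant).items() if base_fields[key] != value}


baseline = Workflow(name="baseline")
project_a = replace(baseline, name="project-a", epochs=150)  # ~100 images
project_b = replace(baseline, name="project-b", epochs=75, batch_size=8)  # ~1000 images

print(overrides(baseline, project_a))
print(overrides(baseline, project_b))
```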

When should I use Full Finetuning (SFT) instead of LoRA Training?

Use Full Finetuning (SFT) when:

- Domain is drastically different:

  - Medical/microscopy images (if base model trained on natural images)

  - Satellite/aerial imagery

  - Specialized visual domains

- Maximum quality is critical:

  - Production systems with strict accuracy requirements

  - Research requiring state-of-the-art results

  - When LoRA Training results are insufficient

- Resources are abundant:

  - Access to high-end GPUs (A100, H100)

  - Training time is not a constraint

  - Budget allows for higher compute costs

Stick with LoRA Training when:

- Standard computer vision tasks:

  - Object detection

  - Classification

  - Quality control

  - Most production applications

- Limited resources:

  - Consumer GPUs

  - Time constraints

  - Budget limitations

- Iterating quickly:

  - Development phase

  - Prototyping

  - A/B testing different approaches

In practice, most use cases work well with LoRA Training. Try LoRA Training first, and switch to Full Finetuning (SFT) only if results are insufficient and you have the resources.

My model generates repetitive outputs. How do I fix this?

Solutions ranked by effectiveness:

1. Increase repetition penalty (first try):

- Current: 1.05 → Try: 1.1 or 1.15

- Directly addresses repetition

- Usually most effective solution

2. Adjust temperature:

- Current: 1.0 → Try: 1.2

- Increases output diversity

- More creative word choices

3. Increase Top P:

- Current: 0.90 → Try: 0.95

- Allows more token variety

- Broader vocabulary usage

4. Increase Top K:

- Current: 50 → Try: 70-100

- Widens sampling pool

- More diverse token selection

5. Training-level solutions (if inference changes don't help):

- Increase dataset diversity

- Add more varied training examples

- Adjust system prompt to encourage variety

Typical fix:

Before:

- Temperature: 1.0

- Repetition penalty: 1.05

- Result: "The car is red. The car is large. The car is parked."

After:

- Temperature: 1.1

- Repetition penalty: 1.15

- Result: "The vehicle is red and large, parked near the building."

Try adjustments incrementally; don't change everything at once.
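To confirm that an adjustment actually reduced repetition, it can help to measure repetition on the same evaluation images before and after the change. The metric below is a hypothetical, minimal sketch and not a platform feature. It computes the fraction of word n-grams that repeat an earlier one.

```python
import re
from collections import Counter


def repeated_ngram_ratio(text: str, n: int = 1) -> float:
    """Fraction of word n-grams that repeat an earlier n-gram (0.0 means no repeats)."""
    words = re.findall(r"[a-z']+", text.lower())
    ngrams = [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]
    if not ngrams:
        return 0.0
    counts = Counter(ngrams)
    repeats = sum(count - 1 for count in counts.values())
    return repeats / len(ngrams)


before = "The car is red. The car is large. The car is parked."
after = "The vehicle is red and large, parked near the building."
print(repeated_ngram_ratio(before), repeated_ngram_ratio(after))  # → 0.5 0.1
```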

What's the difference between batch size and gradient accumulation?

Both affect effective batch size, but differently:

Batch size:

- Number of images processed simultaneously

- Limited by GPU memory

- Higher = faster training (better GPU utilization)

- With no accumulation, gradients are computed and the model is updated after every batch

Gradient accumulation:

- Number of batches before updating model

- Not limited by GPU memory

- Higher = simulates larger batches without extra memory

- Gradients accumulated across batches, then single update

Example comparison:

Configuration A:

- Batch size: 8

- Gradient accumulation: 1

- Effective batch: 8

- Updates per epoch: dataset_size / 8

- Speed: Fast (8 images at once)

- Memory: High (8 images in GPU)

Configuration B:

- Batch size: 2

- Gradient accumulation: 4

- Effective batch: 8 (same as A)

- Updates per epoch: dataset_size / 8 (same as A)

- Speed: Slower (only 2 images at once, so 4 smaller forward/backward passes per update)

- Memory: Low (only 2 images in GPU)

When to use which:

Prefer higher batch size (less accumulation):

- When GPU memory allows

- For faster training

- Simpler configuration

Use gradient accumulation:

- When GPU memory is limited

- To simulate larger batches than GPU allows

- To improve training stability without upgrading GPU

Best practice: Use largest batch size that fits in memory, then add accumulation if you need larger effective batch sizes.
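The claim that both configurations produce the same update can be checked numerically on a toy problem. This sketch assumes a one-parameter linear model with mean squared error. It is illustrative, not how the platform computes gradients.

```python
def mse_gradient(w: float, xs: list[float], ys: list[float]) -> float:
    """Gradient of the mean squared error for the toy model y_hat = w * x."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def accumulated_gradient(w: float, xs: list[float], ys: list[float],
                         micro_batch: int) -> float:
    """Sum micro-batch gradients scaled by 1/steps, then (conceptually) update once.

    Assumes len(xs) is a multiple of micro_batch.
    """
    steps = len(xs) // micro_batch
    total = 0.0
    for i in range(steps):
        chunk = slice(i * micro_batch, (i + 1) * micro_batch)
        total += mse_gradient(w, xs[chunk], ys[chunk]) / steps
    return total


def updates_per_epoch(dataset_size: int, batch_size: int, grad_accum: int) -> int:
    """Optimizer steps per epoch, ignoring any final partial batch."""
    return dataset_size // (batch_size * grad_accum)


xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1, 12.2, 13.8, 16.1]
w = 0.5

full = mse_gradient(w, xs, ys)  # Configuration A: batch 8, accumulation 1
accumulated = accumulated_gradient(w, xs, ys, micro_batch=2)  # Configuration B: batch 2, accumulation 4
print(full, accumulated)  # equal up to floating-point rounding
print(updates_per_epoch(1000, 8, 1), updates_per_epoch(1000, 2, 4))
```

The equality holds when the loss is averaged within each micro-batch, all micro-batches have the same size, and the model has no batch-dependent layers such as batch normalization.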

Next steps

After configuring your model settings:

Continue workflow configuration

- Configure your system prompt — Define VLM task instructions

- Configure your dataset — Set up data splitting

- Create a workflow — Complete workflow setup

Start training

- Manage runs — Launch training and monitor progress

- Monitor a run — Track training metrics in real-time

- Evaluate a model — Assess performance on test data

Optimize performance

- Configure training settings — Advanced optimization options

- View resource usage — Monitor compute and storage costs

Additional resources

Training guides

- Create a training project — Set up training projects

- Train a model — Complete training workflow

- Configure training settings — Advanced parameters

Model configuration

- Configure your model — Model selection and setup

- Configure your dataset — Dataset splitting

- Configure your system prompt — Task instructions

Concept guides

- Phrase grounding — Understanding visual grounding

- Visual question answering — Understanding VQA

- Glossary — VLMOps terminology reference

Quickstart

- Quickstart: Train a model — Fast-track training guide

- Quickstart: Create a workflow — Streamlined workflow setup

Related resources

- Model architectures — Compare VLM architectures and sizes

- Configure your model — Complete model configuration overview

- Create a workflow — Combine model settings into workflows

- Configure training settings — Set checkpoint strategy and GPU

- Train a model — Complete training workflow guide

- Evaluate a model — Assess model performance

- System prompts — Define VLM behavior

- Dataset configuration — Set train-test split

- Resource usage — Understanding Compute Credits

- Quickstart — End-to-end training tutorial

- Vi SDK — Python SDK for model management

- Contact us — Get help from the Datature team
