Evaluate a Model

Review training results, analyze metrics, and assess model performance across different checkpoints.

After training completes, evaluate your model using metrics, visual predictions, and training logs. Evaluation shows how well your vision-language model (VLM) learned from the training data and how it performs on validation examples.

Prerequisites

Before evaluating a model, ensure you have:

- A completed training run (status: Finished)

- Access to the training project containing the run

Access training results

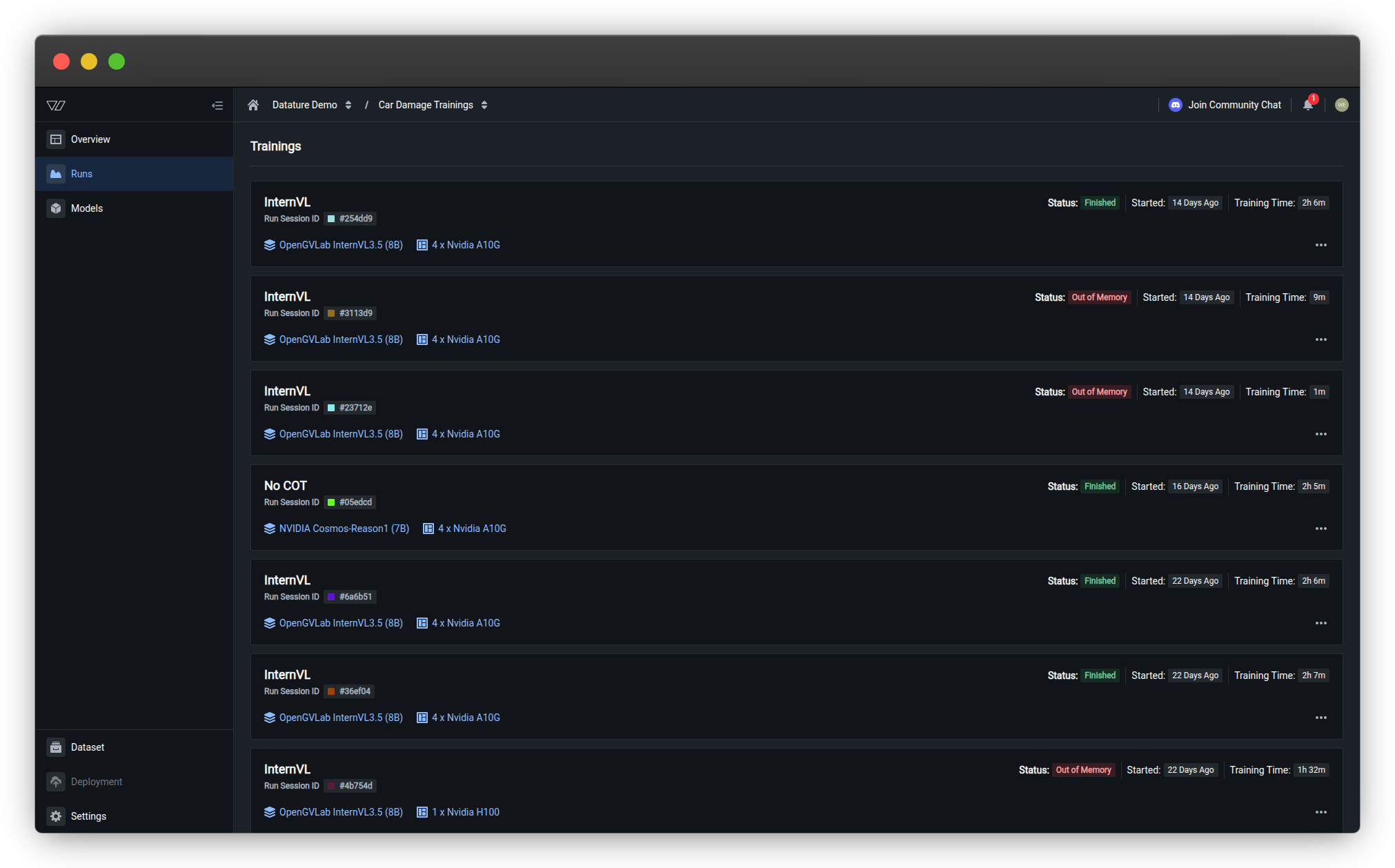

Training results are organized by run in the Runs section of your training project.

Navigate to runs

- Open your training project from the Training section

- Click Runs in the left sidebar

The Runs page displays all training sessions with key information:

| Column | Description |
|---|---|
| Name | Workflow name and Run Session ID |
| Status | Current run state (see common statuses below) |
| Started | How long ago the run began |
| Training Time | Total duration of the training session |
| Model & GPU | Architecture and hardware configuration used |

Common statuses:

- ✅ Finished — Training completed successfully

- 🔄 Running — Currently training

- ⚠️ Out of Memory — GPU memory exhausted; needs troubleshooting

- ❌ Failed — Error occurred; check logs for details

- 🚫 Killed — Manually stopped by user

- 💳 Out of Quota — Compute Credits depleted; refill to resume

Open a training run

Click on any run to view its detailed results. Each run provides three tabs for analyzing different aspects of performance.

Evaluation components

- Track training progress and understand run states from Finished to Out of Memory, with troubleshooting steps

- View loss curves, evaluation metrics (F1, IoU, BLEU, BERTScore), and hyperparameters

- Compare ground truth annotations with model predictions side-by-side across checkpoints

- Review detailed training logs, debug errors, and troubleshoot failed runs

Quick evaluation workflow

Follow these steps to thoroughly evaluate your trained model:

1. Check run status

Verify training completed successfully or identify errors:

- Finished → Proceed to view metrics

- Out of Memory → Troubleshoot GPU memory issues

- Failed → Check error details and logs

- Out of Quota → Refill Compute Credits to resume

2. Review metrics

Analyze quantitative performance measurements:

- Loss curves — Verify model converged successfully

- Evaluation metrics — Assess accuracy for your task type

- Hyperparameters — Document configuration for reproducibility

3. Inspect predictions visually

Compare model outputs against ground truth:

- View side-by-side comparisons of annotations

- Navigate through evaluation checkpoints

- Identify systematic errors and failure patterns

4. Analyze logs (if issues found)

Dig deeper into training behavior:

- Review training steps and epochs

- Identify error patterns and warnings

- Troubleshoot configuration issues
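Step 1 of the workflow above amounts to a lookup from run status to next action. The sketch below is one minimal way to write that down, for example in a team runbook script. The `NEXT_ACTION` table and `next_action` function are hypothetical names introduced here for illustration. They are not part of the Vi SDK, and the status strings are assumed to match the labels shown on the Runs page.

```python
# Hypothetical helper, not part of any SDK: maps a run status (as displayed
# on the Runs page) to the next step suggested in this guide.
NEXT_ACTION = {
    "Finished": "Proceed to view metrics",
    "Out of Memory": "Troubleshoot GPU memory issues",
    "Failed": "Check error details and logs",
    "Out of Quota": "Refill Compute Credits to resume",
}


def next_action(status: str) -> str:
    """Return the suggested next step for a run status string."""
    # Statuses the workflow does not cover (for example Running or Killed)
    # fall through to a generic message.
    return NEXT_ACTION.get(status.strip(), "No action listed; check the run page")


print(next_action("Out of Memory"))
```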

Common evaluation tasks

Compare multiple runs

To identify the best configuration:

- Document metrics from each run in a comparison table

- Note which hyperparameters changed between runs

- Compare visual predictions on the same evaluation examples

- Select the configuration with the best performance for your requirements
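The comparison steps above can be sketched in a few lines of Python once you have copied each run's metrics and hyperparameters out of the platform by hand. Everything here is an assumption made for illustration: the `RunRecord` class, the helper functions, the run names, and the numbers are placeholders rather than real results or platform APIs.

```python
from dataclasses import dataclass


@dataclass
class RunRecord:
    """Metrics and hyperparameters copied manually from one training run."""
    name: str
    hyperparameters: dict
    metrics: dict


def changed_hyperparameters(a: RunRecord, b: RunRecord) -> dict:
    """Return {key: (value_in_a, value_in_b)} for hyperparameters that differ."""
    keys = sorted(set(a.hyperparameters) | set(b.hyperparameters))
    return {
        k: (a.hyperparameters.get(k), b.hyperparameters.get(k))
        for k in keys
        if a.hyperparameters.get(k) != b.hyperparameters.get(k)
    }


def best_run(runs, metric):
    """Pick the run with the highest value of a higher-is-better metric."""
    return max(runs, key=lambda r: r.metrics[metric])


def comparison_table(runs, metric_names):
    """Render the runs as a Markdown table, one row per run."""
    header = "| Run | " + " | ".join(metric_names) + " |"
    divider = "|---|" + "---|" * len(metric_names)
    rows = [
        "| " + r.name + " | "
        + " | ".join(f"{r.metrics[m]:.3f}" for m in metric_names) + " |"
        for r in runs
    ]
    return "\n".join([header, divider, *rows])


# Placeholder values for illustration only, not real results.
runs = [
    RunRecord("run-a", {"learning_rate": 1e-4, "batch_size": 8}, {"F1": 0.81, "IoU": 0.66}),
    RunRecord("run-b", {"learning_rate": 5e-5, "batch_size": 8}, {"F1": 0.84, "IoU": 0.70}),
]

print(comparison_table(runs, ["F1", "IoU"]))
print("Changed:", changed_hyperparameters(runs[0], runs[1]))
print("Best by F1:", best_run(runs, "F1").name)
```

Keeping the hyperparameter diff next to the metric table makes it easier to attribute a change in metrics to a specific setting, provided only one or two settings changed between runs.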

Understand overfitting

Signs your model is overfitting:

- Training loss decreases but validation metrics plateau or degrade

- Large gap between train and validation performance

- Model performs perfectly on training images but poorly on new examples
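The first two signs above can be checked numerically if you record one training-loss value and one validation-loss value per evaluation checkpoint. The sketch below is a rough heuristic under stated assumptions: it uses validation loss as the validation metric, and the `window` and `gap_threshold` defaults are arbitrary starting points, not recommended values. The function name is hypothetical.

```python
def overfitting_signs(train_loss, val_loss, window=3, gap_threshold=0.2):
    """Flag overfitting signs from per-checkpoint loss values.

    train_loss and val_loss are equal-length lists, one value per checkpoint,
    in training order. Lower is better for both.
    """
    if len(train_loss) != len(val_loss) or len(train_loss) < window + 1:
        raise ValueError("need equal-length lists with more than `window` points")

    # Sign 1: training loss still falls over the last `window` checkpoints
    # while validation loss is no better than its earlier best.
    train_falling = train_loss[-1] < train_loss[-1 - window]
    val_not_improving = val_loss[-1] >= min(val_loss[:-window])

    # Sign 2: large gap between validation and training loss at the end.
    gap = val_loss[-1] - train_loss[-1]

    return {
        "diverging": train_falling and val_not_improving,
        "large_gap": gap > gap_threshold,
        # Index of the checkpoint with the lowest validation loss.
        "best_checkpoint": val_loss.index(min(val_loss)),
    }


# Placeholder curves for illustration only.
train = [1.0, 0.7, 0.5, 0.35, 0.25, 0.18]
val = [1.1, 0.8, 0.65, 0.66, 0.70, 0.75]
print(overfitting_signs(train, val))
```

When `diverging` is true, the checkpoint at `best_checkpoint` is usually a better candidate to inspect than the final one, since it precedes the point where validation loss stopped improving.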

Troubleshoot failed runs

Step-by-step diagnostic process:

- Check the run status for the error type

- View error details in the Training Progress section

- Review the logs for complete error messages

- Apply fixes based on error pattern

- Retry with adjusted configuration

Determine if model is production-ready

Evaluation criteria:

- ✅ Metrics meet your accuracy requirements

- ✅ Visual predictions show consistent quality

- ✅ Model generalizes to diverse validation examples

- ✅ False positive/negative rates are acceptable

- ✅ Performance is stable across multiple runs
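Three of the criteria above (accuracy requirement, false positive/negative rates, stability across runs) are quantitative and can be written as explicit checks. The sketch below assumes a task where true negatives can be counted and a single higher-is-better score per run. The function names, thresholds, and counts are all hypothetical placeholders that you would replace with your own requirements. Visual quality and generalization still need manual review.

```python
def false_rates(tp, fp, tn, fn):
    """Return (false positive rate, false negative rate) from confusion counts.

    FPR = FP / (FP + TN) and FNR = FN / (FN + TP); an empty denominator
    yields 0.0.
    """
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    fnr = fn / (fn + tp) if (fn + tp) else 0.0
    return fpr, fnr


def readiness_checks(run_scores, counts, min_score, max_fpr, max_fnr, max_spread):
    """Evaluate the quantitative production-readiness criteria.

    run_scores: one higher-is-better metric value per repeated run.
    counts: dict with keys tp, fp, tn, fn from the validation set.
    """
    fpr, fnr = false_rates(**counts)
    return {
        # Every run must meet the requirement, not just the best one.
        "meets_accuracy": min(run_scores) >= min_score,
        "false_rates_ok": fpr <= max_fpr and fnr <= max_fnr,
        # Stability measured as the spread between best and worst run.
        "stable_across_runs": max(run_scores) - min(run_scores) <= max_spread,
    }


# Placeholder inputs for illustration only.
checks = readiness_checks(
    run_scores=[0.90, 0.91, 0.89],
    counts={"tp": 90, "fp": 5, "tn": 95, "fn": 10},
    min_score=0.85,
    max_fpr=0.10,
    max_fnr=0.15,
    max_spread=0.05,
)
print(checks, "ready:", all(checks.values()))
```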

What's next?

- Export your trained model for deployment or external use

- Iterate on your training configuration to improve results

- Organize, delete, and track your training runs

If your run failed:

- Troubleshoot errors — Follow diagnostic steps

- Fix Out of Memory — Reduce batch size or upgrade GPU

- Monitor runs — Track progress and understand run statuses

- Contact support — Get help from our team

Related resources

- Train a model — Complete training workflow guide

- Monitor a run — Track training progress in real-time

- Configure training settings — Set checkpoint strategy and GPU hardware

- Resource usage — Understanding Compute Credits and GPU costs

- Vi SDK models — Programmatic model inference and evaluation

- Metrics — Understand training metrics and loss curves

- Advanced evaluation — View visual predictions and comparisons

- Logs — Review training logs and debug errors

- Manage runs — Kill or delete runs

- Download a model — Export trained models for deployment

- Configure your model — Select model architecture and settings

- Quickstart — End-to-end training tutorial

- Vi SDK — Python SDK for programmatic access

